Invented by Page Larry, founder of Google in ranking web pages, ” PageRank works by counting the number and quality of links to a page to determine a rough estimate of how important the website is. The underlying assumption is that more important websites are likely to receive more links from other websites”.

A page that is linked to by many pages with high PageRank receives a high rank itself. algorithm outputs a probability distribution used to represent the likelihood that a person randomly clicking on links will arrive at any particular page. (note pages are the nodes, and links are edges)

For example (from wiki), different nodes has different links pointing to them. However, not only the number of links matter, but also the significance of the node from that link, hence, Node C’s rank is higher than node E.

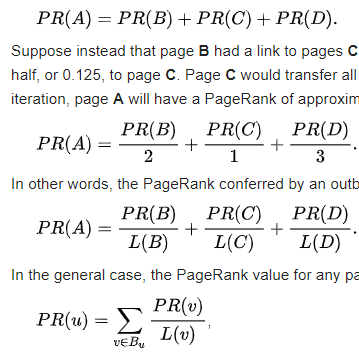

The math behind pagerank is simple as graphed below, assuming there are A, B, C, D four nodes pointing each other.

According to wiki, “the PageRank theory holds that an imaginary surfer who is randomly clicking on links will eventually stop clicking. The probability, at any step, that the person will continue is a damping factor d. Various studies have tested different damping factors, but it is generally assumed that the damping factor will be set around 0.85. ” I am not quite certain if we don’t apply pagerank in page clicking, instead, we apply it in static network of nodes and links, is the damping factor still meaningful?

Below is a python script to carry out this computation.

# Parameter M adjacency matrix where M_i,j represents the link from 'j' to 'i', such that for all 'j'

# sum(i, M_i,j) = 1

# Parameter d damping factor (default value 0.85)

# Parameter eps quadratic error for v (default value 1.0e-8)

# Return v, a vector of ranks such that v_i is the i-th rank from [0, 1]

import numpy as np

def pagerank(M, eps=1.0e-8, d=0.85):

N = M.shape[1]

v = np.random.rand(N, 1)

v = v / np.linalg.norm(v, 1)

last_v = np.ones((N, 1), dtype=np.float32) * 100

while np.linalg.norm(v - last_v, 2) > eps:

last_v = v

v = d * np.matmul(M, v) + (1 - d) / N

return v

M = np.array([[0, 0, 0, 0, 1],

[0.5, 0, 0, 0, 0],

[0.5, 0, 0, 0, 0],

[0, 1, 0.5, 0, 0],

[0, 0, 0.5, 1, 0]])

v = pagerank(M, 0.001, 0.85)

Now we’d like to put this theory into company’s relationship analysis, postulating that ones with higher pagerank – being pointed by other companies with significant and frequency indicate higher return performance.

We will use igraph package. There are several hurdles to overcome to perfectly utilize this tool.

- To transform the dataframe structure to an edge list that Graph class can take in.

- Then we can easily apply Gm.edge_betweenness(), or Gm.pagerank() to compute the score, but how to display the identifiers or vertices? The answer is that igraph maintains an internal mapping from names to vertex IDs, which is automatically updated whenever you add or delete vertices, so a simple g.vs[indexnumber][‘name’] extracts out needed identifiers or names.

Suppliers.head()

su = Suppliers[[‘Company_ID’, ‘Related_Company_ID’,’grade’]]

tuples = [tuple(x) for x in su.values]

Gm = igraph.Graph.TupleList(tuples, directed = True, vertex_name_attr=’name’, edge_attrs = [‘REL_TYPE’])

print(Gm)

nid = Gm.vs[0:len(Gm.degree())][‘name’]

fn = pd.DataFrame({‘pagerank’: pg, ‘nameid’: nid}, index=range(len(pg)))

Added on Nov 18th, 2021, to elaborate various types of Centrality in network problems:

Degree Centrality: number of connection realized upon the total potential connections

Betweenness Centrality: besides the ego node, how many potential pairs are there, this becomes the denominator, then look at the denominator, which is basically how many pairs can be realized by place the ego node in between.

Closeness Centrality: the numerator is fixed as the total nodes substracting the ego node, denominator is to sum up the distance between ego nodes to every other node in the network

Eigenvector Centrality: the most complicated and elegant way; first come up with the matrix A composed of n(n is the number of nodes) column and n rows, if the two are connected, populate the cell as 1, otherwise 0. So each one’s centrality s the linear combination of your neighbor’s centrality. The math just boils down to compute the eigenvalues of this matrix.

What we calculated is called pagerank centrality, Eigenvector centrality is undirected, and PageRank applies for directed network as is detailed above. However, PageRank uses the indegree as the main measure to estimate the influence level, thus it turns to be a very specific case or variant of Eigenvector centrality.