Here is what Apple's model portal looks like:

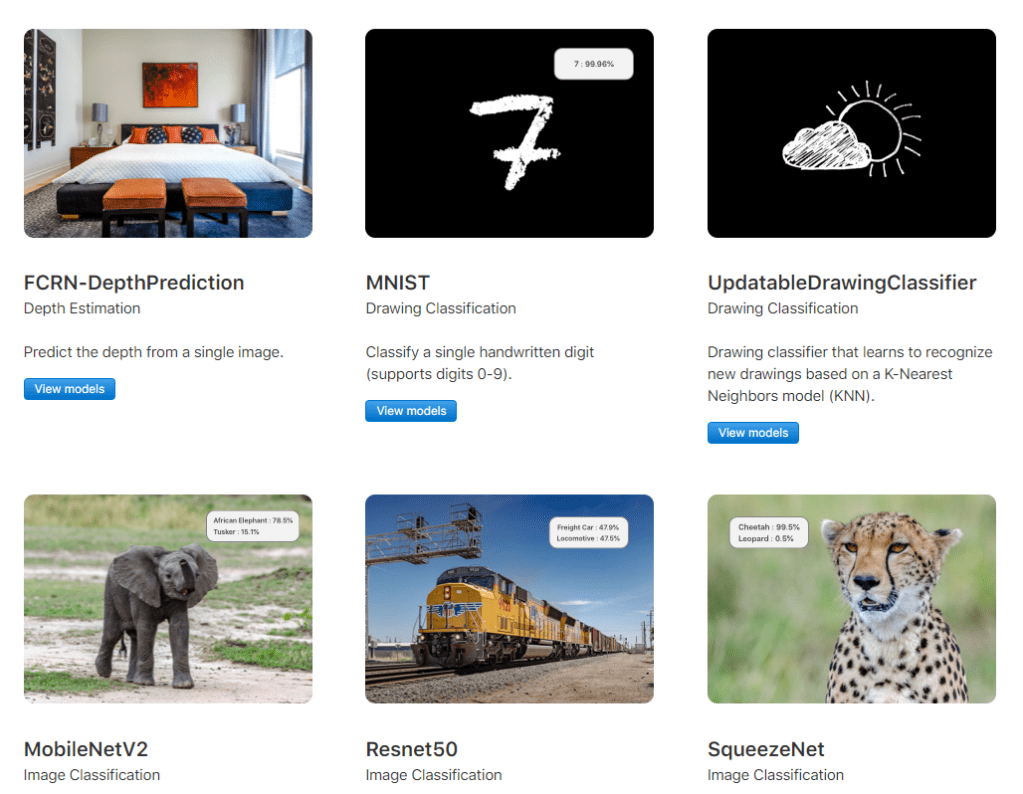

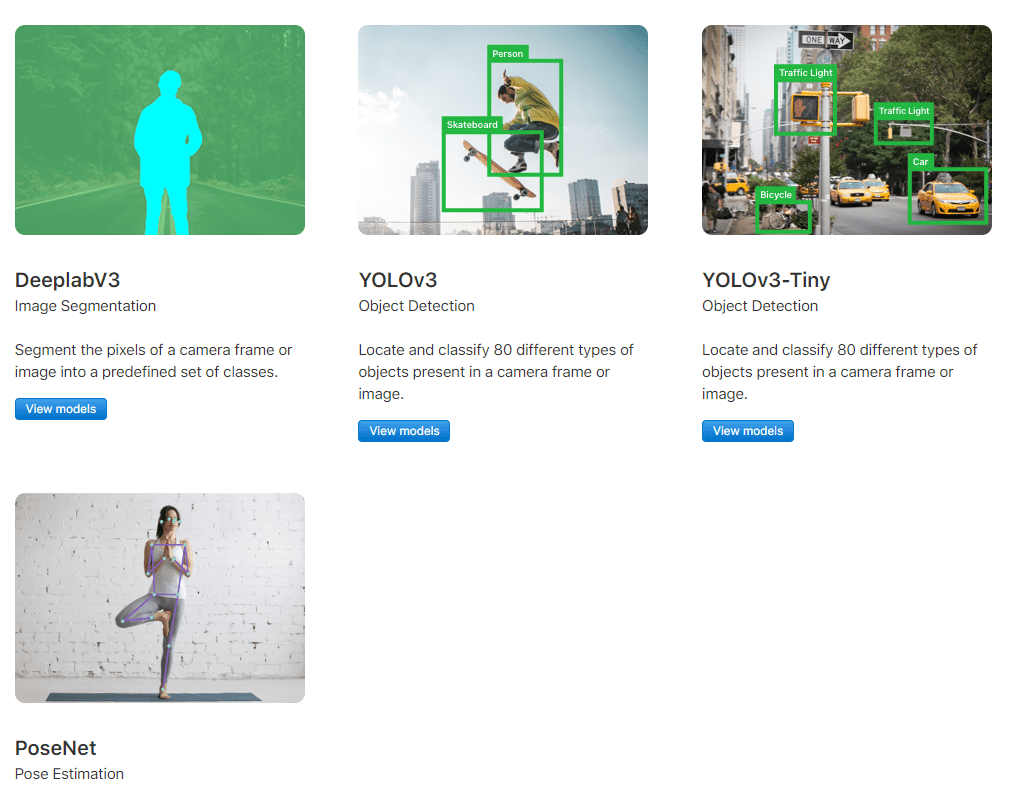

So far there are only two categories: Text and Image.

FCRN-DepthPrediction addresses the problem of estimating the depth of a scene from a single RGB image. It uses a CNN trained with the reverse Huber (berHu) loss function, and was published by Iro Laina et al. in 2016.

The second is the famous MNIST handwritten digit recognition model, credited to NYU and Google.
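To make the reverse Huber loss a bit more concrete, here is a minimal sketch in plain Swift. It is not the authors' code: the function names `berHu` and `berHuLoss` are my own, averaging over the batch is an assumption of this sketch, and the threshold `c` is set to one fifth of the largest absolute residual, which is how I understand the paper's choice. The idea is that small errors are penalized linearly (like L1) and large errors quadratically (like L2).

```swift
/// Reverse Huber (berHu) penalty for one residual x with threshold c > 0:
/// |x| when |x| <= c, otherwise (x^2 + c^2) / (2c).
func berHu(_ x: Double, threshold c: Double) -> Double {
    let a = abs(x)
    return a <= c ? a : (x * x + c * c) / (2 * c)
}

/// Mean berHu loss over predicted and ground-truth depths (hypothetical helper).
/// Assumption: c is one fifth of the largest absolute residual in the batch.
func berHuLoss(predicted: [Double], target: [Double]) -> Double {
    precondition(predicted.count == target.count && !predicted.isEmpty)
    let residuals = zip(predicted, target).map { $0 - $1 }
    let maxResidual = residuals.map { abs($0) }.max() ?? 0
    // If every residual is zero, the loss is zero and c would be degenerate.
    guard maxResidual > 0 else { return 0 }
    let c = maxResidual / 5
    let total = residuals.reduce(0.0) { $0 + berHu($1, threshold: c) }
    return total / Double(residuals.count)
}
```

The two branches meet at `|x| == c`, where both evaluate to `c`, so the penalty is continuous at the switch point.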

…

The catalog is not as comprehensive as what Google's TensorFlow or Facebook offer, but Apple seems to keep growing it.

Next, I'll go through the entire procedure of creating an app based on Core ML.

The IDE is Xcode, which, like Core ML, is developed by Apple. The code for a simple object recognition app is as follows:

    //
    //  ViewController.swift
    //  SmartCameraLBTA
    //
    //  Created by Jason on 8/12/20.
    //  Copyright © 2020 Grand Wazoo. All rights reserved.
    //

    import AVKit
    import UIKit
    import Vision

    class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {
        @IBOutlet var cameraView: UIView!
        @IBOutlet var modelLabel: UILabel!
        @IBOutlet var descriptionLabel: UILabel!

        override func viewDidLoad() {
            super.viewDidLoad()

            // Start the camera: a capture session fed by the default video device.
            let captureSession = AVCaptureSession()
            captureSession.sessionPreset = .photo
            guard let captureDevice = AVCaptureDevice.default(for: .video) else { return }
            guard let input = try? AVCaptureDeviceInput(device: captureDevice) else { return }
            captureSession.addInput(input)
            captureSession.startRunning()

            // Show the live camera feed inside cameraView.
            let previewLayer = AVCaptureVideoPreviewLayer(session: captureSession)
            cameraView.layer.addSublayer(previewLayer)
            previewLayer.frame = view.frame

            // Deliver every video frame to captureOutput(_:didOutput:from:) on a background queue.
            let dataOutput = AVCaptureVideoDataOutput()
            dataOutput.setSampleBufferDelegate(self, queue: DispatchQueue(label: "videoQueue"))
            captureSession.addOutput(dataOutput)
        }

        // Called once per captured frame.
        func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
            guard let pixelBuffer: CVPixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else { return }

            // Either model works here; SqueezeNet is the alternative to Resnet50.
            // guard let model1 = try? VNCoreMLModel(for: SqueezeNet().model) else { return }
            guard let model2 = try? VNCoreMLModel(for: Resnet50().model) else { return }

            let request = VNCoreMLRequest(model: model2) { [unowned self] (finishedReq, err) in
                // UI updates must happen on the main thread.
                DispatchQueue.main.async {
                    guard let results = finishedReq.results as? [VNClassificationObservation] else { return }
                    guard let f = results.first else { return }
                    self.descriptionLabel.text = "\(f.identifier)"
                    self.modelLabel.text = "Confidence: \(f.confidence)"
                }
            }

            // Run the classification request on the current frame.
            try? VNImageRequestHandler(cvPixelBuffer: pixelBuffer, options: [:]).perform([request])
        }
    }
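The completion handler above writes the top result straight into the two labels, so the confidence shows up as a raw fraction and even a very uncertain guess is displayed. As a small sketch of one way to tidy that up, the helper below turns a list of predictions into the two label strings and falls back to a placeholder when the best confidence is too low. Everything here is hypothetical and for illustration only: the `Prediction` struct stands in for the `identifier` and `confidence` fields of `VNClassificationObservation`, and `labelTexts`, the 0.3 default threshold, and the fallback strings are my own choices.

```swift
/// Hypothetical stand-in for the two fields read from VNClassificationObservation.
struct Prediction {
    let identifier: String
    let confidence: Float
}

/// Builds the texts for the description and confidence labels.
/// Falls back to placeholder text when there is no prediction
/// or the best one is below minConfidence (an assumed threshold).
func labelTexts(for predictions: [Prediction],
                minConfidence: Float = 0.3) -> (description: String, confidence: String) {
    guard let best = predictions.max(by: { $0.confidence < $1.confidence }),
          best.confidence >= minConfidence else {
        return ("Not sure", "Confidence: n/a")
    }
    // Show the confidence as a whole-number percentage.
    let percent = Int((best.confidence * 100).rounded())
    return (best.identifier, "Confidence: \(percent)%")
}
```

In the view controller, the observations could be mapped into `Prediction` values and the two returned strings assigned to `descriptionLabel.text` and `modelLabel.text`.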

The source code is hosted on GitHub. Connect an iPhone to the Mac and run the project from Xcode, which compiles the code to machine code and installs the app on the phone. To commercialize the app, we need an Apple Developer account: we upload the app for Apple's approval, and once approved it is hosted on the App Store for people across the world to install.