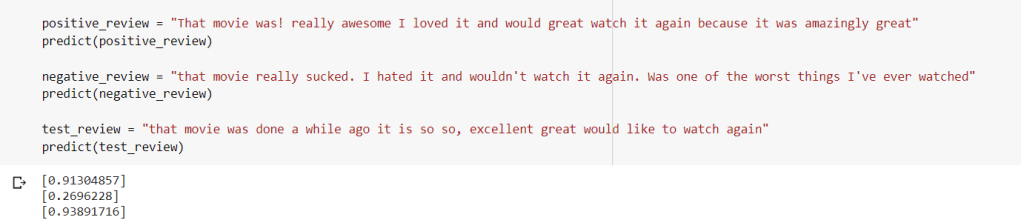

This is the version from “tedwithtech”, the performance is not as good as I expected (a test line “that movie was done a while ago it is amazingly positive, excellent great remarkable” returns only 0.3 score, as low as to be categorized as negative. It is a great toy case to do trouble shooting and figure out improving it so in the next hydrogen theme judgment project, I can apply the same.

First, the dataset of imdb movie reviews are provided by keras already (from keras.datasets import imdb). How large is this set of data? There are 50000 rows of data splitting to half train_dataset and half test_dataset.

train_dataset = train_dataset.shuffle(BUFFER_SIZE).batch(BATCH_SIZE).prefetch(tf.data.AUTOTUNE) BUFFER_SIZE is set to be 10000, and BATCH_SIZE 64. tf.data.AUTOTUNE is?

In keras carried imdb data, encoding is done easily with

word_index = imdb.get_word_index()

def encode_text(text): tokens = keras.preprocessing.text.text_to_word_sequence(text) tokens = [word_index[word] if word in word_index else 0 for word in tokens] return sequence.pad_sequences([tokens], MAXLEN)[0]

in tfds imdb_reviews, specific steps are taken as

VOCAB_SIZE=1000

encoder = tf.keras.layers.experimental.preprocessing.TextVectorization(

max_tokens=VOCAB_SIZE)

encoder.adapt(train_dataset.map(lambda text, label: text))

Note the .adapt method sets vocabulary. while in the quick keras package, upstream or texvectorization, adapt is done in text_to_word_sequence, and imdb_get_word_index().

(train_data, train_labels), (test_data, test_labels) = imdb.load_data(num_words = VOCAB_SIZE), the smaller integers represent more common words, so deciding vocab_size = 88584 here means to remove least commonly used words beyond sequence order of 88584.

train_data = sequence.pad_sequences(train_data, MAXLEN), maxlen is set to be 250, again, it’s the window size when cursor sequentially move forward I believe. If the total text size is smaller than maxlen, then padding or pad_sequences is needed.

tf.keras.layers.Embedding(VOCAB_SIZE, 32), 32 is the embedding parameters, important. For larger and complex corpus, it can be tuned up to 64 or even 128.

tf.keras.layers.LSTM(32), simple LSTM model, tried to replace it with bidirectional LSTM as tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(32)), it took much longer time to train but the result is still not satisfying.

tf.keras.layers.Dense(1, activation=”relu”) activation can be sigmoid and relu, the key difference is the sigmoid squeeze the range from -1 to +1, while relu from 0 to 1, many cases relu is more suitable.

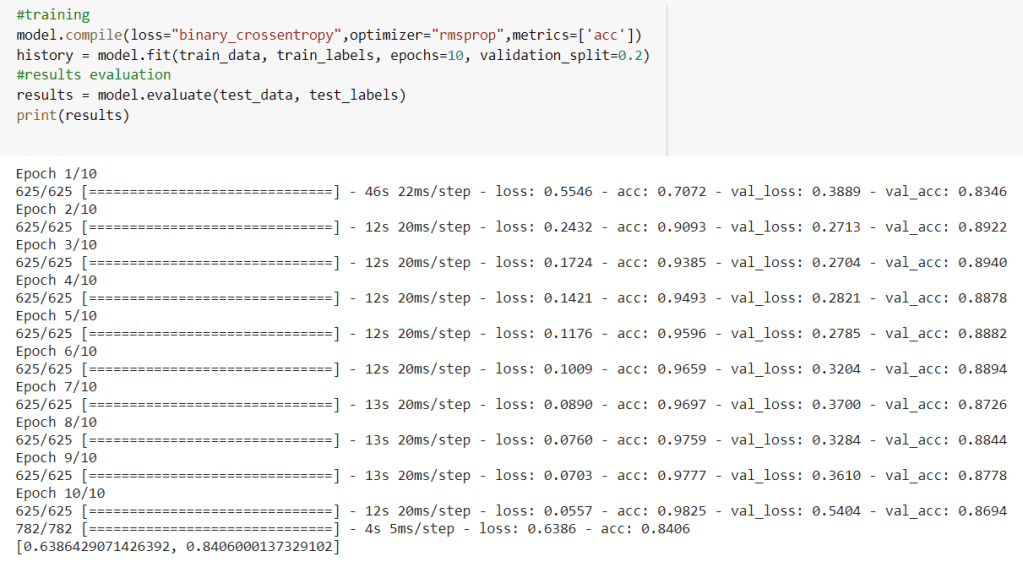

model.compile(loss=”binary_crossentropy”,optimizer=”rmsprop”,metrics=[‘acc’]), there are various loss functions and optimizer.

model.fit(train_data, train_labels, epochs=10, validation_split=0.2), epochs is the number of training performed. from the result below one can see that the model is overfitting, the accuracy was high to 98% in training but the validation cannot go above it, stayting at 87%.

For the below random testing text, I fount it unstable and did a lousy job in predicting

With a better understanding of how this imdb data look like and basic model setting, we’d go deeper to finetune and improve the accuracy of RNN. STUCK, so go back to his original clip to jog the memory what is his comment on the lousy prediction. So it turns out Tim demoed how he ad hoc change the sample text and observe large variance of prediction score without offering any insight.

Through stackoverflow, I collected several good suggestion. I will explore in new blogs. Consistent to what I figured myself, applying LSA and LDA is necessary, then Word2Vec and Doc2Vec. Also, choose a proper model from a spectrum of Support Vector Machines (SVMs), Naive Bayes and Decision Trees , Maximum Entropy…