It is not as traditional IDE like VS code, it allows system distribution, or parallel processing, ideal for big data analysis in a real large scale. Matei Zaharia at UC Berkeley’s AMPLab in 2009, open sourced it has over 1000 contributors in 2015, making it one of the most active open source big data projects.

Quick dip into a Youtuber(https://www.youtube.com/watch?v=u8mz569tv5I) to learn the gist of it. He demoed the installation Docker Desktop to launch Apache Spark, Apache Zeppelin(visual tool, like JupyterNotebook for Python).

The UI looks as below:

The foundation is Spark Core, providing distributed task dispatching through an interface such as Python, scala centered on the RDD abstraction. From Wiki, a typical example of RDD-centric functional programming is the following Scala program that computes the frequencies of all words occurring in a set of text files and prints the most common ones. Each map, flatMap (a variant of map) and reduceByKey takes an anonymous function that performs a simple operation on a single data item (or a pair of items), and applies its argument to transform an RDD into a new RDD.

val conf = new SparkConf().setAppName(“wiki_test”) // create a spark config object

val sc = new SparkContext(conf) // Create a spark context

val data = sc.textFile(“/path/to/somedir”) // Read files from “somedir” into an RDD of (filename, content) pairs.

val tokens = data.flatMap(_.split(” “)) // Split each file into a list of tokens (words).

val wordFreq = tokens.map((_, 1)).reduceByKey(_ + _) // Add a count of one to each token, then sum the counts per word type.

wordFreq.sortBy(s => -s._2).map(x => (x._2, x._1)).top(10) // Get the top 10 words. Swap word and count to sort by count.

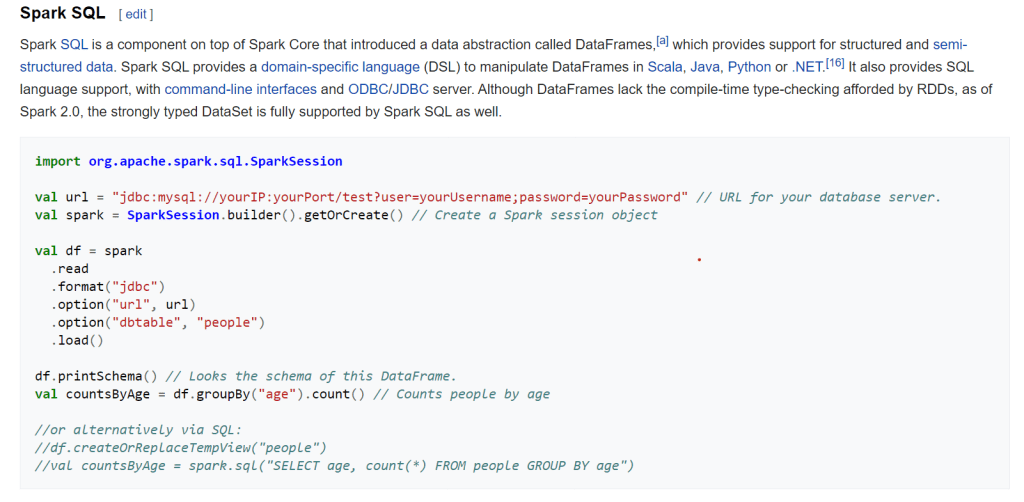

And there is the component of Spark SQL