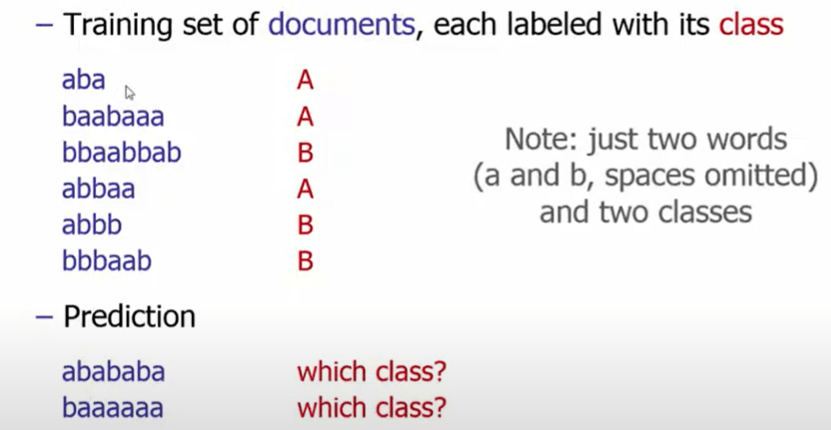

Classification, the ultimate goal we would try to accomplish in all sorts of the area such as movies, music and companies/stocks. So even in real application it could be super messy, it is always easier to start from a simple toy kind of example:

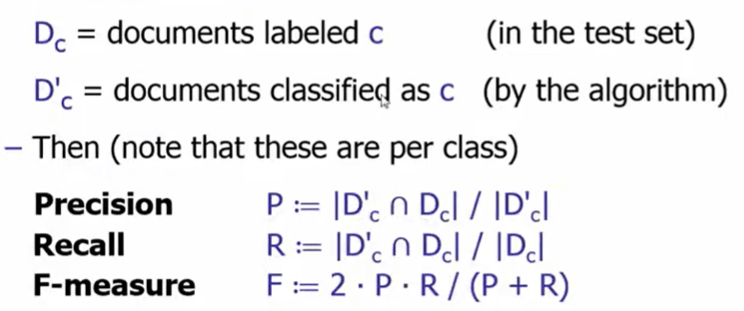

To set the tone, we define quality measuring metrics beforehand

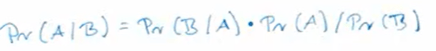

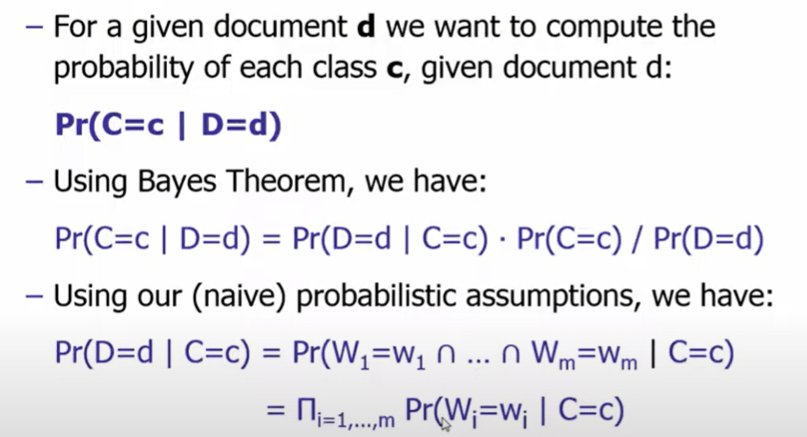

And warm up the understanding of Naive Bayes theory, which is as simple as to get the probability of an intersection on the condition of one subset

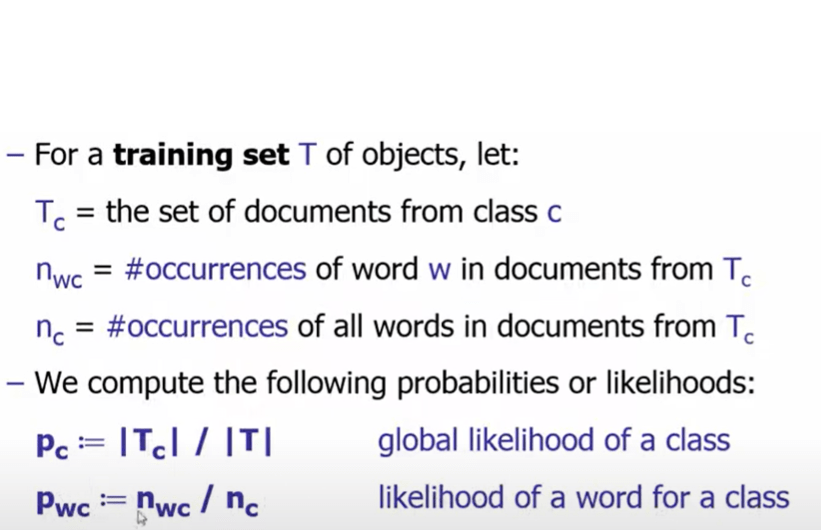

It’s astounding – as much as how eigenvector decomposition in figuring out words relationship in term-document matrix – to then learn how applying this Bayes probability theory helps solve the classification problem in the most messy textual subjects. here is how:

Now to apply this in that toy example, we get

once grasped the idea in actual application we will come across issues such as a word is missing or a classification is missing in training data, numerical stability issue when there are millions of data points that is tiny to the decimal places that computer can not handle…