Prompting is essential. Good examples are collected here.

From a more systematic prompt engineering video:

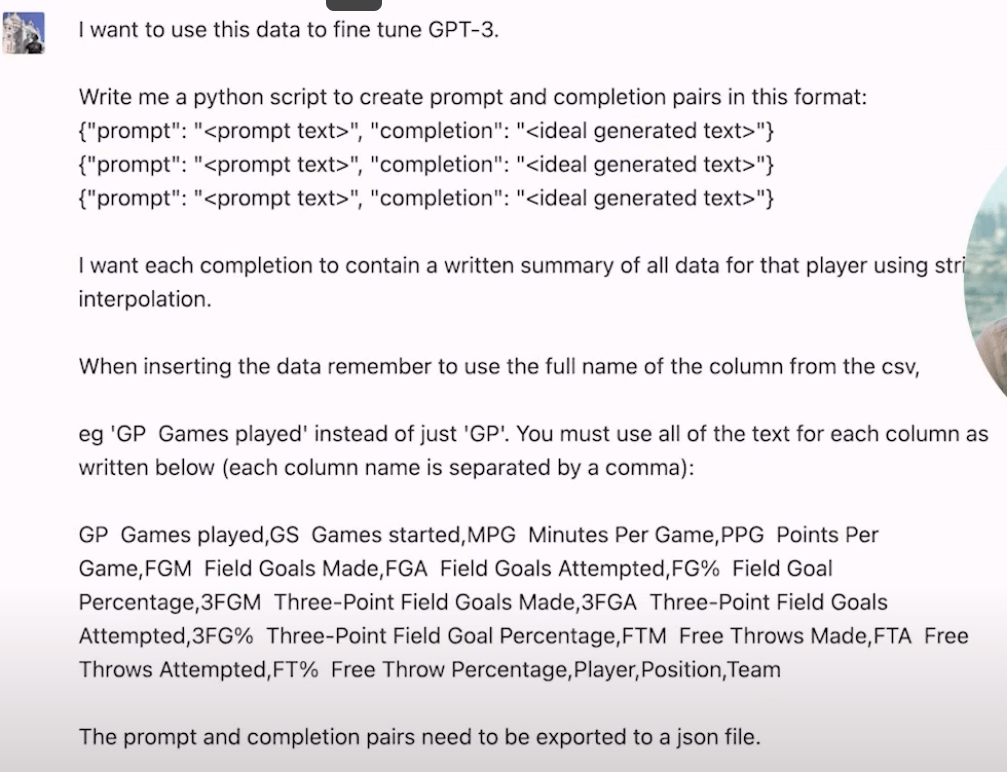

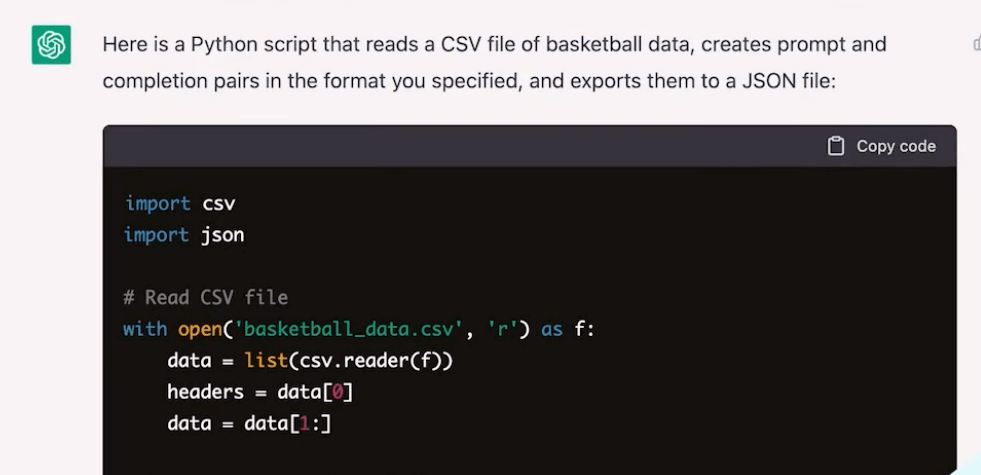

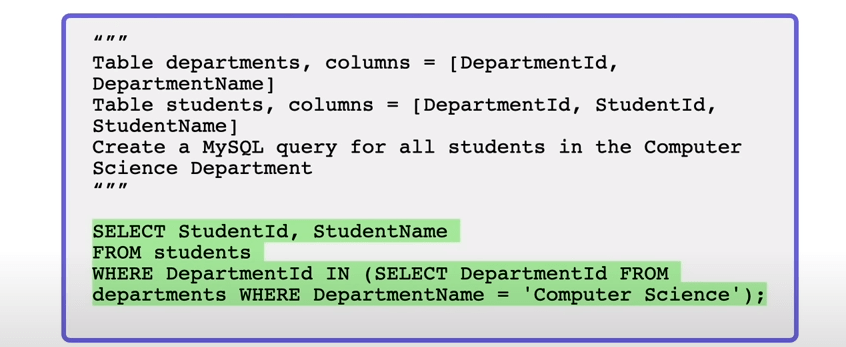

Code generation sample 2:

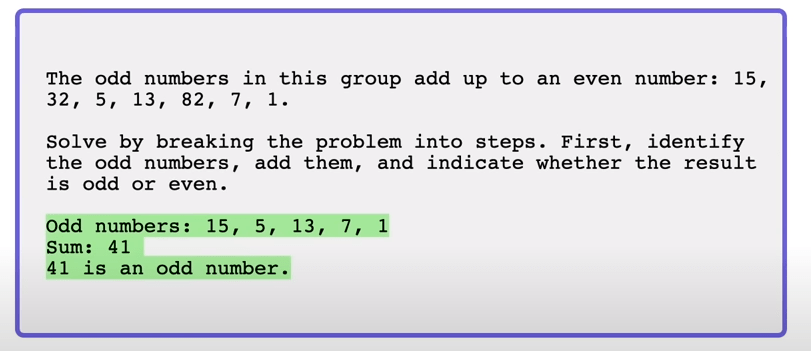

Compared to ChatGPT, GPT-4 is notably better at reasoning. The prompt sample is

Next, moving to more advanced prompt engineering:

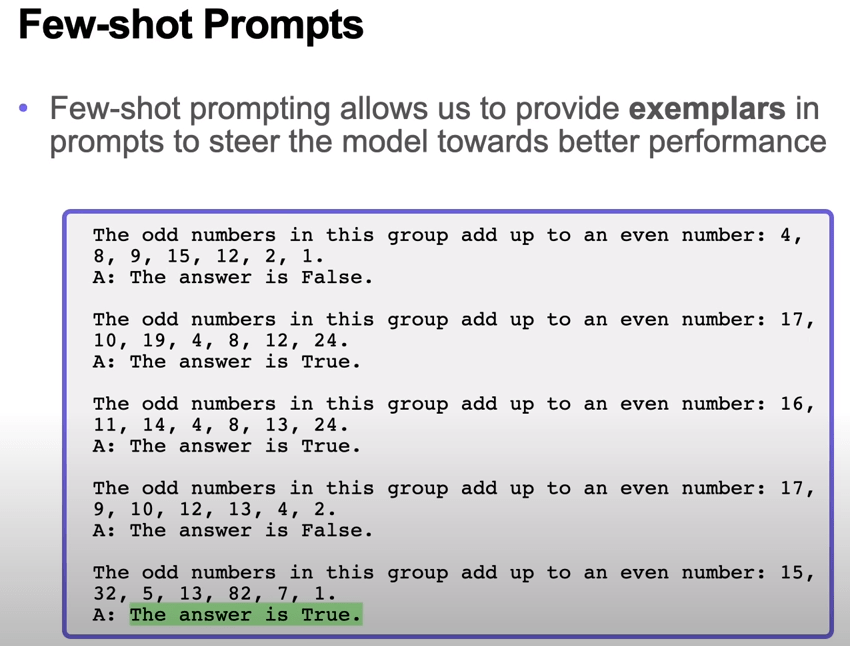

In-context learning:

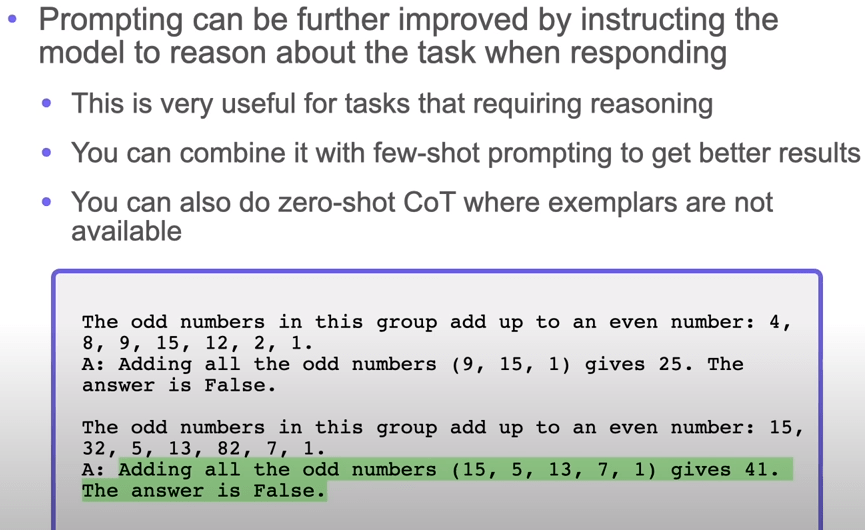
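As a rough illustration of in-context learning, the sketch below assembles a few-shot prompt from demonstration pairs. The function name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the sentiment examples are assumptions chosen for illustration, not taken from the video.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Format (input, output) demonstration pairs followed by the new query."""
    parts = [instruction] if instruction else []
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    # The final block leaves the output empty so the model completes it.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


# Hypothetical demonstrations for a sentiment task.
examples = [
    ("This is awesome!", "positive"),
    ("This is bad!", "negative"),
]
prompt = build_few_shot_prompt(
    examples,
    "What a horrible show!",
    instruction="Classify the sentiment of each input.",
)
print(prompt)
```

The model sees the pattern in the demonstrations and is expected to continue it for the last input, with no weight updates involved.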

Chain-of-thought (CoT) prompting:

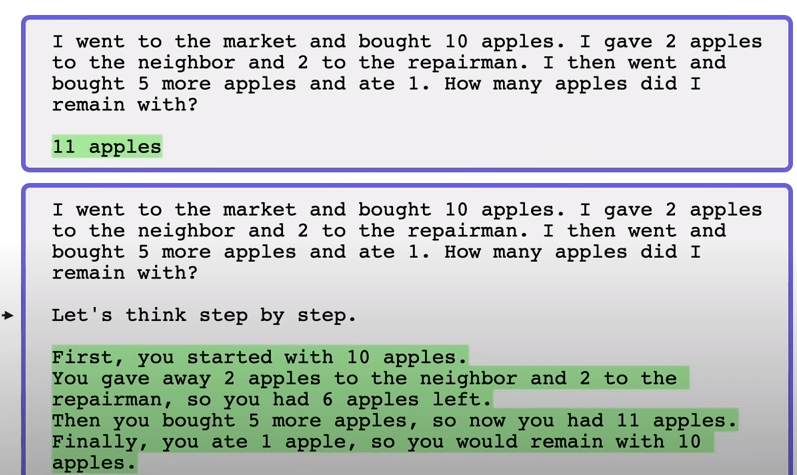

Zero-shot CoT prompting involves appending "Let's think step by step" to the prompt.

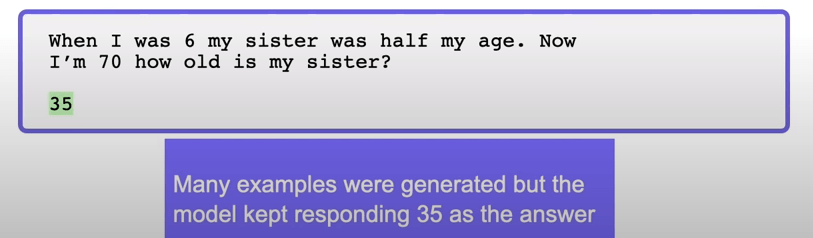

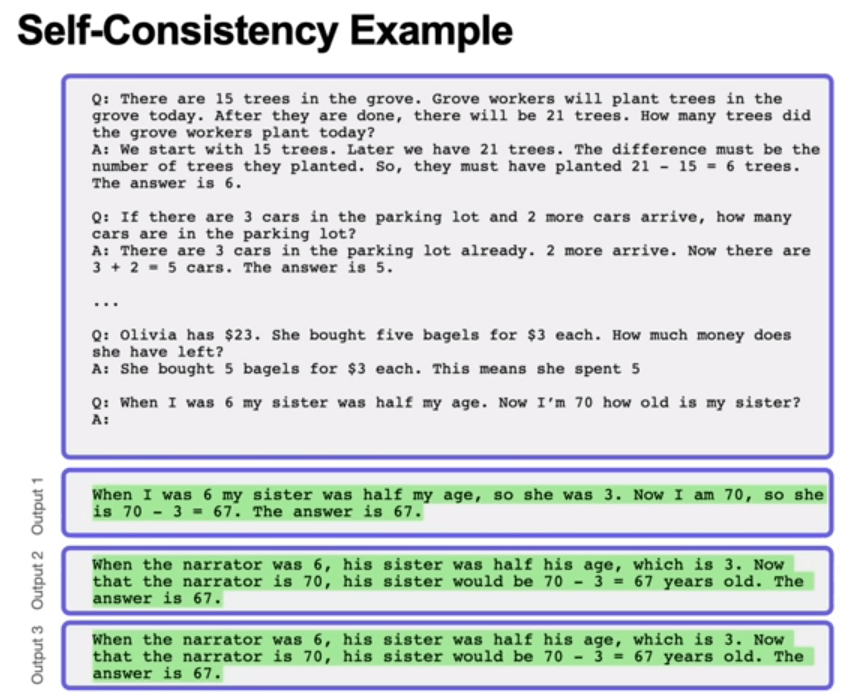
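Zero-shot CoT needs no demonstrations, only the trigger phrase. A minimal sketch, assuming a simple `Q:`/`A:` layout (the helper name `zero_shot_cot` is hypothetical):

```python
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str) -> str:
    """Append the zero-shot chain-of-thought trigger to a question."""
    # Placing the trigger at the start of the answer nudges the model
    # to write out its reasoning before the final result.
    return f"Q: {question.strip()}\nA: {COT_TRIGGER}"


print(zero_shot_cot("I bought 10 apples and gave away 4. How many are left?"))
```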

Self-consistency prompting has an interesting example on age reasoning:

After applying self-consistency prompting (sampling several reasoning paths and taking the majority answer), the answer improves:

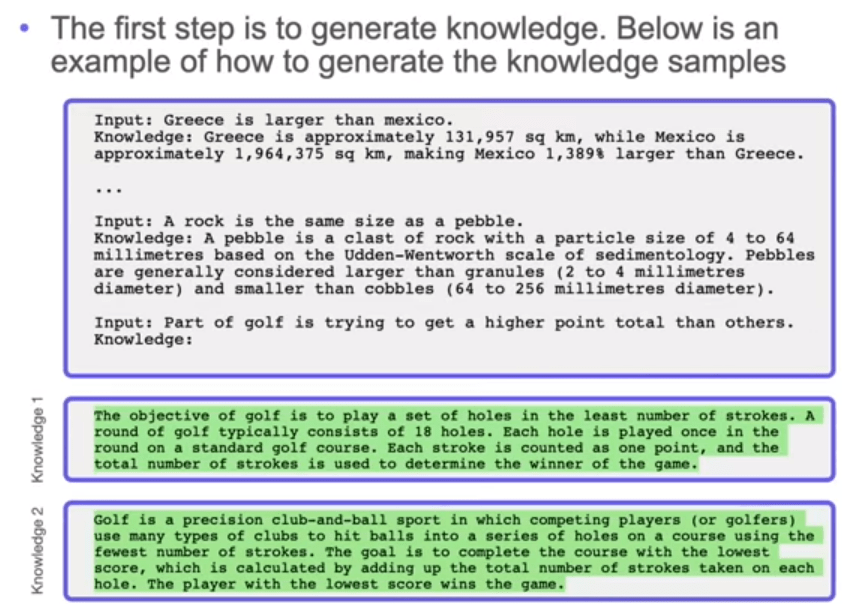

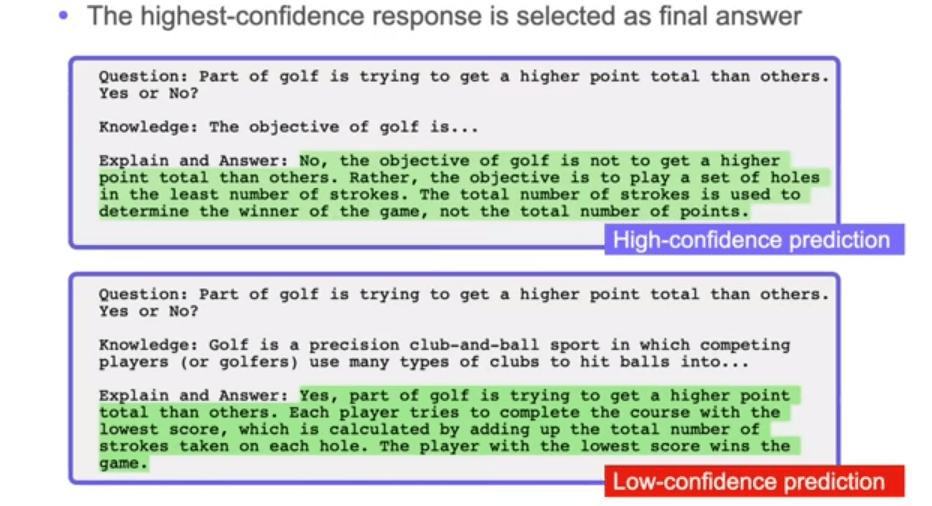
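The voting step of self-consistency can be sketched as follows. The completions are hand-written stand-ins for sampled model outputs, and taking the last number in each completion as its final answer is a simplifying assumption.

```python
import re
from collections import Counter


def extract_answer(completion):
    """Treat the last number in a completion as its final answer (assumption)."""
    numbers = re.findall(r"-?\d+(?:\.\d+)?", completion)
    return numbers[-1] if numbers else None


def self_consistent_answer(completions):
    """Return the most frequent final answer across sampled reasoning paths."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Hypothetical sampled completions for an age-reasoning question.
samples = [
    "When I was 6 my sister was 3, so she is 3 years younger. 70 - 3 = 67",
    "Half of 6 is 3. Now I am 70, so she is 70 / 2 = 35",
    "The gap is 6 - 3 = 3 years. At 70 she is 67",
]
print(self_consistent_answer(samples))  # 67
```

In practice the samples would come from calling the model several times with a non-zero temperature, so that different reasoning paths are explored.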

Generated knowledge prompting:

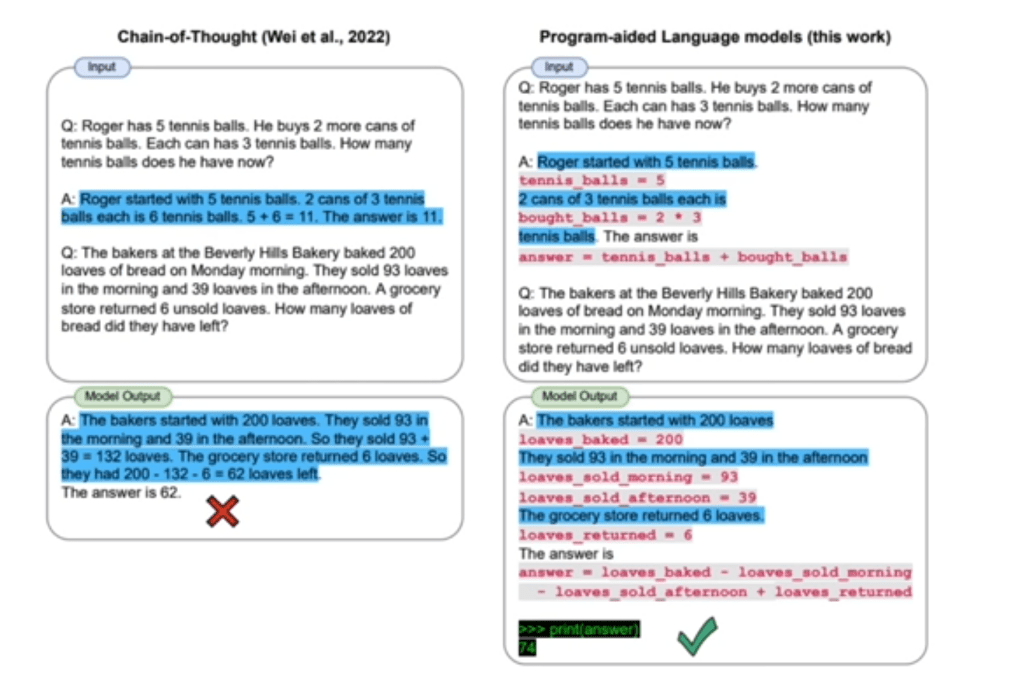

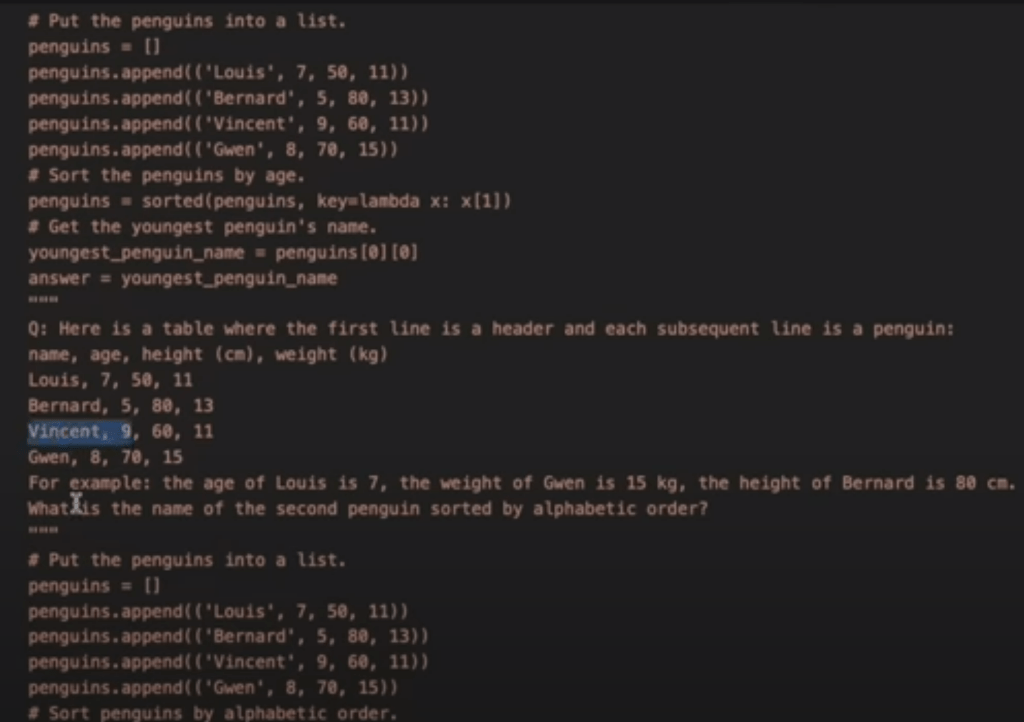
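Generated knowledge prompting runs in two stages: first ask the model for relevant facts, then feed those facts back in with the question. The sketch below assumes a generic `generate` callable standing in for any model call; `fake_generate` and the prompt wording are hypothetical.

```python
from typing import Callable


def answer_with_generated_knowledge(question: str, generate: Callable[[str], str]) -> str:
    # Stage 1: ask the model for background facts relevant to the question.
    knowledge = generate(
        f"Generate some knowledge about the input.\nInput: {question}\nKnowledge:"
    )
    # Stage 2: feed those facts back in as context for the actual answer.
    final_prompt = (
        f"Question: {question}\nKnowledge: {knowledge.strip()}\nExplain and answer:"
    )
    return generate(final_prompt)


def fake_generate(prompt: str) -> str:
    """Stand-in for a real model call; returns canned text."""
    if prompt.endswith("Knowledge:"):
        return "In golf, the player with the lowest total score wins."
    return "No. " + prompt


result = answer_with_generated_knowledge(
    "Is the goal of golf to get a higher score than others?", fake_generate
)
print(result)
```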

Program-aided language models (PAL): chain-of-thought prompting steers models to perform better at complex reasoning tasks, and it can be augmented by program-aided language models, which generate the intermediate reasoning steps as program code.

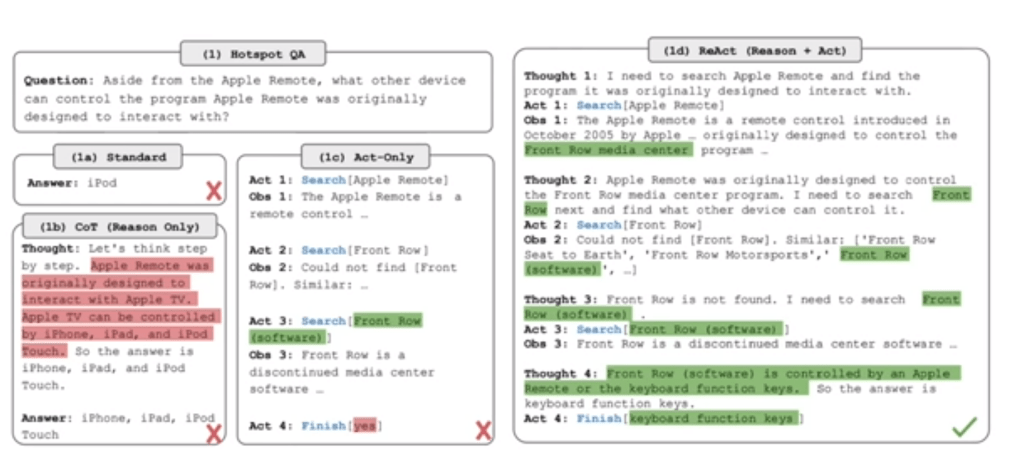
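The idea behind PAL is that the model writes its reasoning as code and an interpreter computes the answer. A minimal sketch, where `generated` is a hand-written stand-in for model output and the convention of storing the result in a variable named `answer` is an assumption:

```python
def run_generated_program(program: str, result_name: str = "answer"):
    """Execute model-written Python and return the variable holding the answer."""
    namespace = {}
    # exec on untrusted model output is unsafe; a real system would sandbox this.
    exec(program, {"__builtins__": {}}, namespace)
    return namespace[result_name]


# Hypothetical model output for a word problem about tennis balls.
generated = """
# Roger starts with 5 tennis balls.
tennis_balls = 5
# He buys 2 cans with 3 balls each.
bought_balls = 2 * 3
answer = tennis_balls + bought_balls
"""
print(run_generated_program(generated))  # 11
```

Offloading the arithmetic to the interpreter means the model only has to get the reasoning structure right, not the calculation itself.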

Combining reasoning and acting further: ReAct

The prompt includes demonstrations of how to solve the problem, such as the extreme case below:

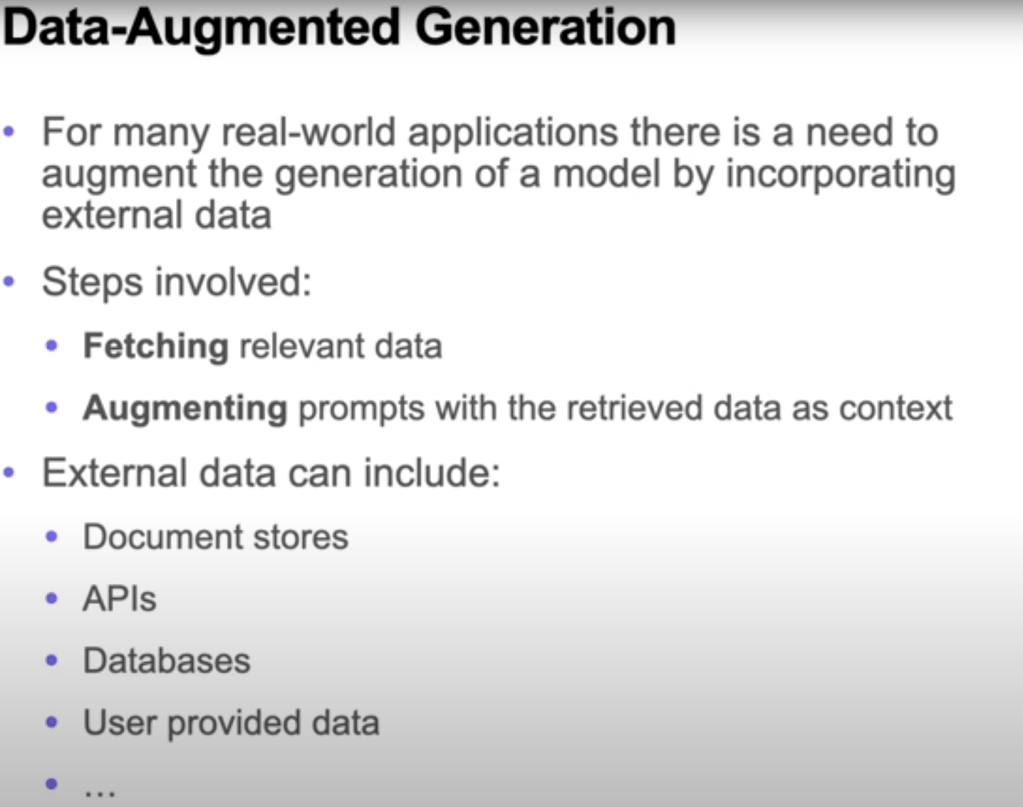

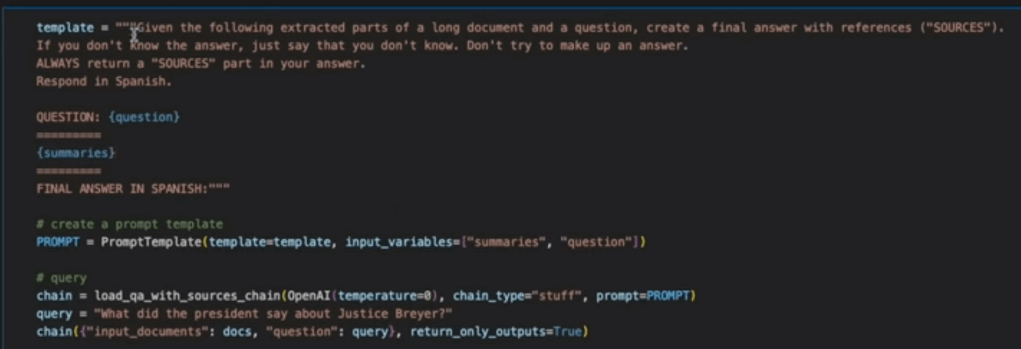
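A ReAct loop alternates model output (thought plus action) with tool observations. The sketch below is an illustration under assumptions: the `Action: Name[argument]` syntax, the `Finish` action, the `Lookup` tool, and `scripted_model` are all hypothetical stand-ins for a real model and real tools.

```python
import re

ACTION_RE = re.compile(r"Action: (\w+)\[(.*?)\]")


def react_loop(question, model, tools, max_steps=5):
    """Alternate model steps and tool observations until Finish[...] appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            break  # the model produced no action, so stop
        name, arg = match.groups()
        if name == "Finish":
            return arg
        # Run the requested tool and append its result for the next step.
        observation = tools[name](arg) if name in tools else f"unknown tool {name}"
        transcript += f"Observation: {observation}\n"
    return None


def scripted_model(transcript):
    """Stand-in for a real model: looks up once, then finishes."""
    if "Observation:" not in transcript:
        return "Thought: I need the capital of France.\nAction: Lookup[France]"
    return "Thought: The observation gives the capital.\nAction: Finish[Paris]"


tools = {"Lookup": lambda country: {"France": "Paris"}.get(country, "not found")}
final = react_loop("What is the capital of France?", scripted_model, tools)
print(final)  # Paris
```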

For real applications, which are likely to explode in the near future, data-augmented generation would be it!
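Data-augmented generation means retrieving relevant documents and placing them in the prompt before the question. A toy sketch, assuming word overlap as the retrieval score (real systems typically use embeddings or a search index); the function names and sample documents are hypothetical.

```python
def retrieve(query, documents, k=2):
    """Rank documents by how many query words they share (a toy scoring rule)."""
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_augmented_prompt(query, documents, k=2):
    """Place the top-k retrieved documents in front of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\nQuestion: {query}\nAnswer:"
    )


docs = [
    "The return window is 30 days from delivery.",
    "Shipping is free on orders over 50 dollars.",
    "Support is available on weekdays.",
]
query = "How many days is the return window?"
print(build_augmented_prompt(query, docs, k=1))
```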