Direct methods for eigenvalue and eigenvector problems compute the full set of solutions to within numerical precision. The name is loose: for general matrices larger than 4×4 no finite closed-form procedure exists, so practical methods such as QR still iterate internally. Several direct methods exist; this section focuses on the QR method.

NumPy already provides a one-line solver: `eigenvalues, eigenvectors = np.linalg.eig(A)`. Internally it calls LAPACK's `geev` routines, which are built on an optimized form of the QR method. The basic, unoptimized QR method looks like this:

```python
# QR method for solving the eigenvalue problem
import numpy as np

# Define a sample matrix
A = np.array([[6, 2], [2, 3]])

def qr_factorization_eigen(A, max_iter=100, tol=1e-6):
    """
    Find eigenvalues of A using the QR factorization method.

    Parameters:
    - A: square matrix.
    - max_iter: maximum number of iterations.
    - tol: convergence tolerance.

    Returns:
    - Diagonal of A after convergence or max_iter iterations
      (approximated eigenvalues).
    """
    A = np.array(A, dtype=float)  # work on a float copy, leave the input untouched
    for _ in range(max_iter):
        Q, R = np.linalg.qr(A)  # QR factorization
        A = R @ Q  # Compute A' = RQ

        # Check for convergence: entries below the diagonal close to zero,
        # i.e. A has become (numerically) upper triangular
        below_diagonal_sum = np.sum(np.abs(np.tril(A, k=-1)))
        if below_diagonal_sum < tol:
            break
    return np.diag(A)

# Apply the QR factorization method on matrix A
eigenvalues_qr = qr_factorization_eigen(A)
print(eigenvalues_qr)  # approximately [7. 2.]
```

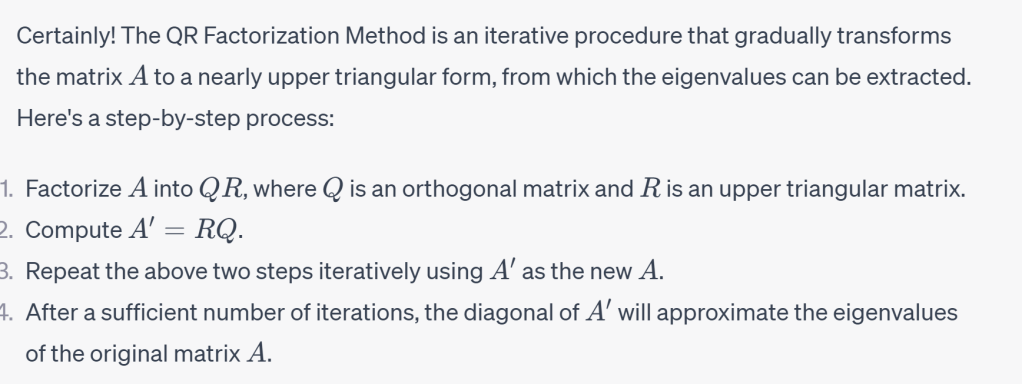

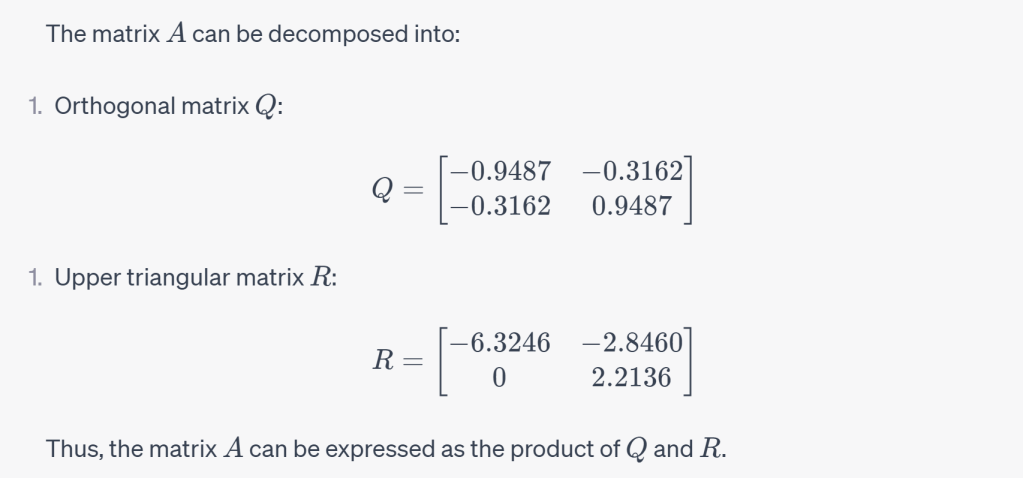
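As a cross-check, the same sample matrix can be passed to `np.linalg.eig`. The sketch below also verifies the defining relation A v = λ v for each returned eigenpair. Sorting is only there because `np.linalg.eig` does not promise any particular ordering of the eigenvalues.

```python
import numpy as np

A = np.array([[6.0, 2.0], [2.0, 3.0]])

# Reference solution from NumPy (LAPACK under the hood)
eigenvalues, eigenvectors = np.linalg.eig(A)

# Each column v of `eigenvectors` should satisfy A v = lambda v
for lam, v in zip(eigenvalues, eigenvectors.T):
    assert np.allclose(A @ v, lam * v)

# Sort before comparing with the QR-method result
print(np.sort(eigenvalues))  # approximately 2 and 7
```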

Note: The process of decomposing a matrix A into the product of an orthogonal matrix Q and an upper triangular matrix R is known as QR factorization (or QR decomposition). One standard way to compute it is the Gram-Schmidt process, which orthonormalizes the columns of A one at a time.
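A minimal sketch of QR factorization by classical Gram-Schmidt is given here for illustration. The function name `gram_schmidt_qr` is hypothetical, and the code assumes the columns of A are linearly independent. `np.linalg.qr` itself relies on LAPACK's Householder-based routines, which are numerically more robust, so this is not how NumPy does it.

```python
import numpy as np

def gram_schmidt_qr(A):
    """Classical Gram-Schmidt QR (illustrative; assumes independent columns)."""
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    Q = np.zeros((m, n))
    R = np.zeros((n, n))
    for j in range(n):
        v = A[:, j].copy()
        for i in range(j):
            R[i, j] = Q[:, i] @ A[:, j]  # component of column j along q_i
            v -= R[i, j] * Q[:, i]       # remove that component
        R[j, j] = np.linalg.norm(v)      # length of what is left
        Q[:, j] = v / R[j, j]            # normalize to get q_j
    return Q, R

A = np.array([[6.0, 2.0], [2.0, 3.0]])
Q, R = gram_schmidt_qr(A)

assert np.allclose(Q @ R, A)             # the factors reproduce A
assert np.allclose(Q.T @ Q, np.eye(2))   # columns of Q are orthonormal
```

The signs of individual columns of Q (and rows of R) may differ from what `np.linalg.qr` returns. Both are valid factorizations.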

To summarize, swapping Q and R in the QR eigenvalue method is a deliberate move: each step replaces A with a similar matrix, so the eigenvalues never change, while repeated steps drive the matrix toward triangular form, where the eigenvalues appear on the diagonal.
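The claim that the swap preserves eigenvalues can be checked numerically. Because A = QR and Q is orthogonal, R = QᵀA, and therefore RQ = QᵀAQ, which is a similarity transform of A. A short check on the sample matrix:

```python
import numpy as np

A = np.array([[6.0, 2.0], [2.0, 3.0]])
Q, R = np.linalg.qr(A)
A_next = R @ Q  # one step of the QR method

# RQ equals Q^T A Q, a similarity transform of A
assert np.allclose(A_next, Q.T @ A @ Q)

# Similar matrices share the same eigenvalues
assert np.allclose(np.sort(np.linalg.eigvals(A_next)),
                   np.sort(np.linalg.eigvals(A)))
```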

To be continued: a closer look at why swapping Q and R to form A’, and then iterating, arrives at the eigenvalue solution.

The QR decomposition into an orthonormal matrix Q and an upper triangular matrix R is also very useful for solving linear least-squares problems.
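As a rough illustration of the least-squares use: with the reduced factorization X = QR, minimizing ‖Xc − y‖ reduces to solving the small triangular system Rc = Qᵀy. The data below are made up for the example (points lying exactly on y = 1 + 2x), and `np.linalg.lstsq` is used only as a reference.

```python
import numpy as np

# Hypothetical data: fit y ≈ c0 + c1 * x
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 5.0, 7.0])          # exactly y = 1 + 2x
X = np.column_stack([np.ones_like(x), x])   # design matrix, shape (4, 2)

Q, R = np.linalg.qr(X)                      # reduced QR: Q is 4x2, R is 2x2
coeffs = np.linalg.solve(R, Q.T @ y)        # solve R c = Q^T y

# Compare with NumPy's built-in least-squares solver
reference, *_ = np.linalg.lstsq(X, y, rcond=None)
assert np.allclose(coeffs, reference)
```

`np.linalg.solve` is a general solver and does not exploit the triangular shape of R. A dedicated back-substitution routine would be the more economical choice for large problems.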