Data processing/cleansing is essential. The goal is to make the series stationary so we can fit ARMA, ARCH, and similar models. The tricks include subtracting the mean, dividing by the standard deviation, and differencing to remove the trend. After the statistical analysis, we also need to convert the results back to the scale of what we originally set out to estimate. The plan is:

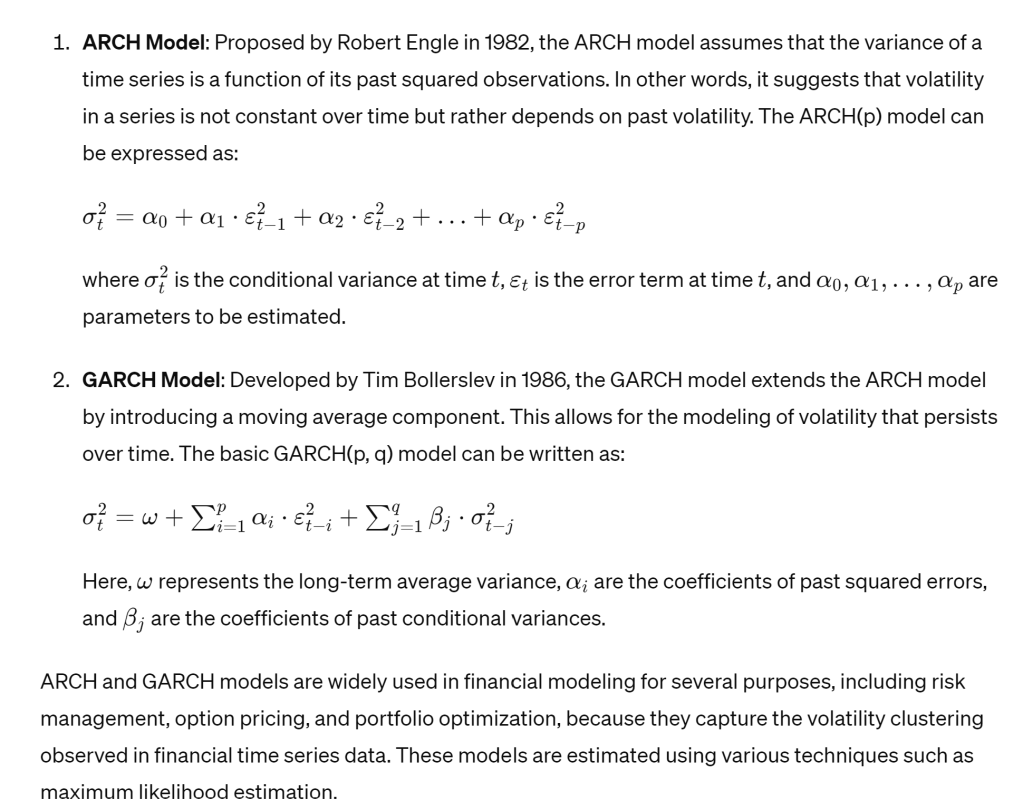
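A minimal sketch of that round trip, assuming the steps are applied in the order normalize, then first-difference, and undone in reverse. The function names and the toy series are made up for illustration, and only the standard library is used:

```python
from itertools import accumulate
from statistics import mean, pstdev

def make_stationary(series):
    """Normalize, then first-difference.

    Returns the transformed series plus the values needed to undo it.
    """
    mu, sigma = mean(series), pstdev(series)
    z = [(x - mu) / sigma for x in series]
    diffed = [b - a for a, b in zip(z, z[1:])]
    return diffed, {"mu": mu, "sigma": sigma, "first": z[0]}

def invert(diffed, state):
    """Undo the differencing (cumulative sum), then undo the normalization."""
    z = list(accumulate(diffed, initial=state["first"]))
    return [v * state["sigma"] + state["mu"] for v in z]

raw = [10.0, 12.0, 15.0, 19.0, 24.0]  # toy trending series
diffed, state = make_stationary(raw)
restored = invert(diffed, state)      # matches raw up to rounding error
```

The point of keeping `state` around is that a forecast made on the differenced, normalized scale can be pushed through `invert` to land back on the original scale.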

ARCH and GARCH, as explained by ChatGPT:

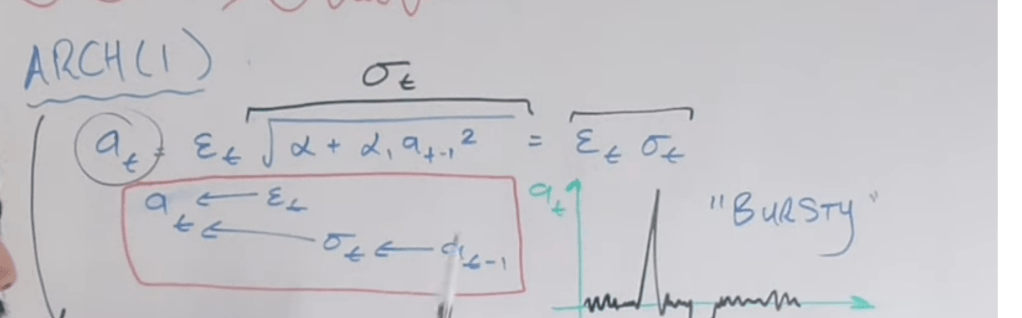

However, that explanation is not as clear and intuitive as the one from ritvikmath.

The G in GARCH stands for generalized: the variance equation also includes its own past values, which smooths out those bursty jumps.

It also does a good job of predicting volatility when used with a rolling forecast origin.
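To see the smoothing effect, here is a rough sketch of the one-step-ahead variance recursion for ARCH(1) and GARCH(1,1). The parameter values (`omega`, `alpha`, `beta`) and the toy return series are assumptions picked for illustration, not estimates from data, and the parameters are held fixed rather than re-estimated at each forecast origin:

```python
def conditional_variance(returns, omega, alpha, beta=0.0):
    """One-step-ahead variance forecasts.

    sigma2[t + 1] = omega + alpha * returns[t]**2 + beta * sigma2[t]
    beta = 0 gives ARCH(1); beta > 0 gives GARCH(1,1).
    """
    # Start from the unconditional variance (requires alpha + beta < 1).
    sigma2 = [omega / (1.0 - alpha - beta)]
    for r in returns:
        sigma2.append(omega + alpha * r ** 2 + beta * sigma2[-1])
    return sigma2

returns = [0.0, 0.0, 3.0, 0.0, 0.0, 0.0]  # toy series with a single shock
arch = conditional_variance(returns, omega=0.2, alpha=0.5)
garch = conditional_variance(returns, omega=0.2, alpha=0.3, beta=0.5)
```

After the shock, the ARCH forecast spikes and snaps straight back to `omega` on the next step, while the GARCH forecast decays gradually because each forecast carries over part of the previous one.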

Next, the important VAR (Vector AutoRegression) model. Each series is predicted not only from its own past values but also from the past values of the other time series modeled in parallel:

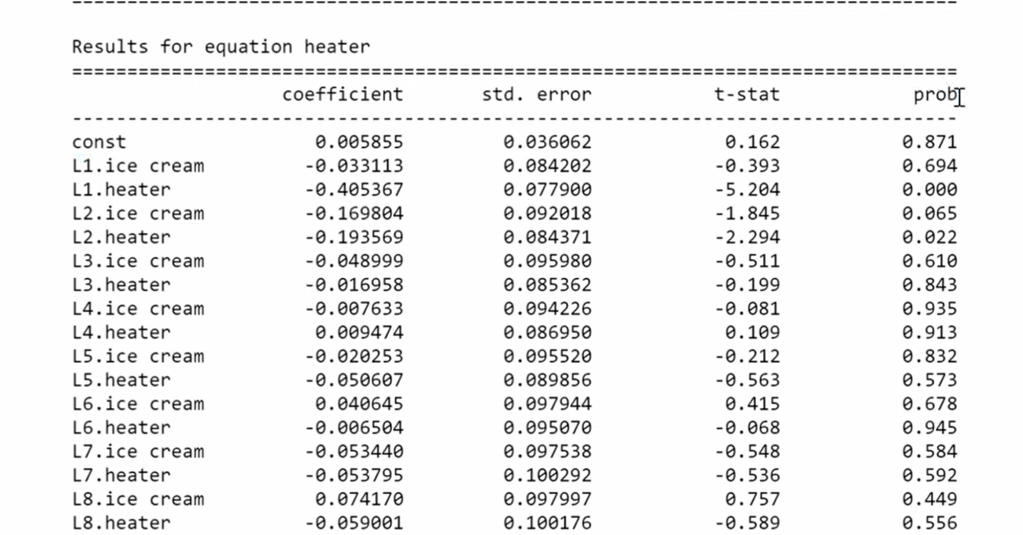
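As a small illustration of that idea, here is a sketch of a one-step VAR(1) forecast for two series. The coefficient values are hypothetical (a fitted model would estimate them), and the series names are borrowed from the heater and ice cream demo:

```python
def var1_forecast(last_obs, intercept, coef):
    """One-step VAR(1) forecast: intercept + coef @ last_obs."""
    return [
        c + sum(a * y for a, y in zip(row, last_obs))
        for c, row in zip(intercept, coef)
    ]

# Hypothetical coefficients, series order is [ice_cream, heater].
intercept = [0.1, 0.0]
coef = [
    [0.6, -0.2],  # ice_cream(t) from ice_cream(t-1) and heater(t-1)
    [-0.3, 0.5],  # heater(t)    from ice_cream(t-1) and heater(t-1)
]
forecast = var1_forecast([1.0, 2.0], intercept, coef)
```

The off-diagonal entries of `coef` are what make this a VAR rather than two separate AR models: they let yesterday's value of one series move today's forecast of the other.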

It’s easy to feed data into the VAR model; the harder part is interpreting the output:

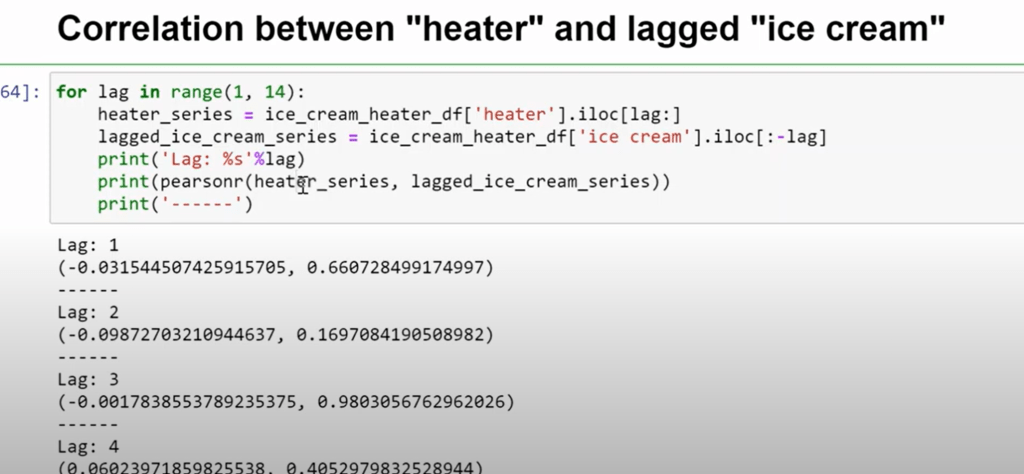

In doing VAR, the only tricky part is choosing the lag order for the cross-referenced time series. In his demo, he settled on 13 lags experimentally, based on the correlation between heater and lagged ice cream.
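One way to run that kind of experiment is to scan the correlation between one series and lagged copies of the other, then look for the lag that stands out. The sketch below assumes that is roughly what the demo does; the helper names and the toy data (where the true delay is 3 steps) are invented for illustration:

```python
from math import sqrt

def pearson(x, y):
    """Plain Pearson correlation between two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy)

def lagged_correlations(target, driver, max_lag):
    """Correlation between target[t] and driver[t - k] for k = 1..max_lag."""
    return {
        k: pearson(target[k:], driver[:-k])
        for k in range(1, max_lag + 1)
    }

# Toy data: heater copies ice_cream with a 3-step delay and a flipped sign.
ice_cream = [float((7 * t) % 11) for t in range(40)]
heater = [0.0, 0.0, 0.0] + [-x for x in ice_cream[:-3]]

corrs = lagged_correlations(heater, ice_cream, max_lag=6)
best_lag = max(corrs, key=lambda k: abs(corrs[k]))
```

On real data the peak is rarely this clean, so the chosen lag is a judgment call rather than a computed answer.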

VAR can be used to conduct Granger causality analysis, which determines whether adding lagged values of one variable improves the forecast of another. If it does, there is evidence of Granger causality from the first variable to the second. Note that Granger causality does not mean there is a real cause-and-effect relationship; it only says that one series has statistical predictive power for the other.
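The comparison behind the test can be sketched as two regressions: one that predicts a series from its own lag only, and one that also includes the other series' lag. This is a simplified version under stated assumptions (a single lag, zero-mean series, no intercept, synthetic data); the function names are hypothetical and a real analysis would use a statistics library and report a p-value:

```python
import random

def ols_rss(y, columns):
    """Residual sum of squares from regressing y on one or two columns
    (no intercept), solved through the normal equations."""
    if len(columns) == 1:
        (u,) = columns
        a = sum(p * q for p, q in zip(u, y)) / sum(p * p for p in u)
        fitted = [a * p for p in u]
    else:
        u, v = columns
        suu = sum(p * p for p in u)
        svv = sum(q * q for q in v)
        suv = sum(p * q for p, q in zip(u, v))
        suy = sum(p * t for p, t in zip(u, y))
        svy = sum(q * t for q, t in zip(v, y))
        det = suu * svv - suv ** 2
        a = (suy * svv - suv * svy) / det
        b = (suu * svy - suv * suy) / det
        fitted = [a * p + b * q for p, q in zip(u, v)]
    return sum((t - f) ** 2 for t, f in zip(y, fitted))

def granger_f(target, driver):
    """F-statistic for 'driver Granger-causes target' using one lag."""
    y = target[1:]
    own_lag, other_lag = target[:-1], driver[:-1]
    rss_restricted = ols_rss(y, [own_lag])
    rss_full = ols_rss(y, [own_lag, other_lag])
    dof = len(y) - 2  # observations minus parameters in the full model
    return (rss_restricted - rss_full) / (rss_full / dof)

rng = random.Random(0)
x = [rng.gauss(0, 1) for _ in range(200)]
# y follows x with a one-step delay, plus a little noise.
y = [0.0] + [0.8 * xi + 0.1 * rng.gauss(0, 1) for xi in x[:-1]]

f_x_to_y = granger_f(y, x)  # large: lagged x helps predict y
f_y_to_x = granger_f(x, y)  # small by comparison: lagged y adds little for x
```

A large F-statistic in one direction only says that the lagged series carries extra predictive information, which is exactly the limited sense of "causality" described above.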