Perception in ROS refers to the process of enabling a robot to understand its environment by gathering and interpreting sensor data. It involves the use of various sensors such as cameras, LiDAR, ultrasonic sensors, and more to perceive the surroundings, detect objects, map the environment, and interpret scenes for decision-making and interaction.

Common perception-related nodes and libraries include:

Depth Image Processing: ROS packages like depth_image_proc help process depth images from sensors like depth cameras or stereo vision systems.

OpenCV: A widely-used library for image processing and computer vision, often integrated with ROS for tasks like feature detection, image filtering, and object recognition.

PCL (Point Cloud Library): A library for processing 3D point cloud data, often used with LiDAR and stereo camera data to create 3D maps and perform object recognition or environmental reconstruction.
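To make the depth-image item a bit more concrete, here is a minimal sketch of the pinhole back-projection that turns a depth image into 3D points, which is conceptually the kind of conversion a depth-processing package performs. This is plain Python, not the depth_image_proc API: the function name `depth_to_points`, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the tiny depth image are all made up for illustration.

```python
import math


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (metres, list of rows) into 3D points.

    Pixels with zero, negative, or NaN depth are treated as invalid and skipped.
    """
    points = []
    for v, row in enumerate(depth):        # v: pixel row
        for u, z in enumerate(row):        # u: pixel column
            if math.isnan(z) or z <= 0.0:
                continue
            # Pinhole camera model: shift by the principal point,
            # then scale by depth over focal length.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Hypothetical 2x3 depth image and intrinsics, for illustration only.
depth = [
    [1.0, 1.0, 0.0],
    [2.0, float("nan"), 2.0],
]
cloud = depth_to_points(depth, fx=2.0, fy=2.0, cx=1.0, cy=0.5)
for p in cloud:
    print(p)
```

In a real setup the intrinsics would come from the camera's calibration rather than being hard-coded, and the loop would normally be vectorized.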

Perception is crucial: without it, the robot cannot perceive its environment or manipulate objects as instructed.

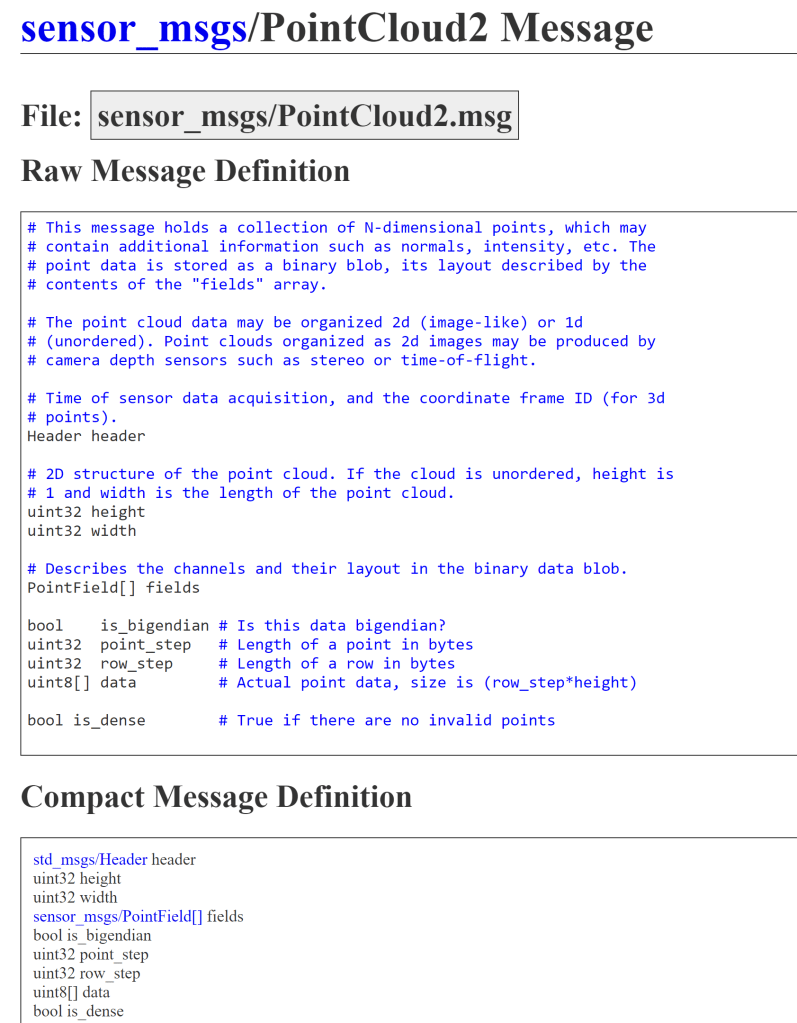

In the exercise provided by the ROS tutorial, we’ll be using 3D point cloud data from a common Kinect-style sensor.

Here is what the point cloud data looks like:

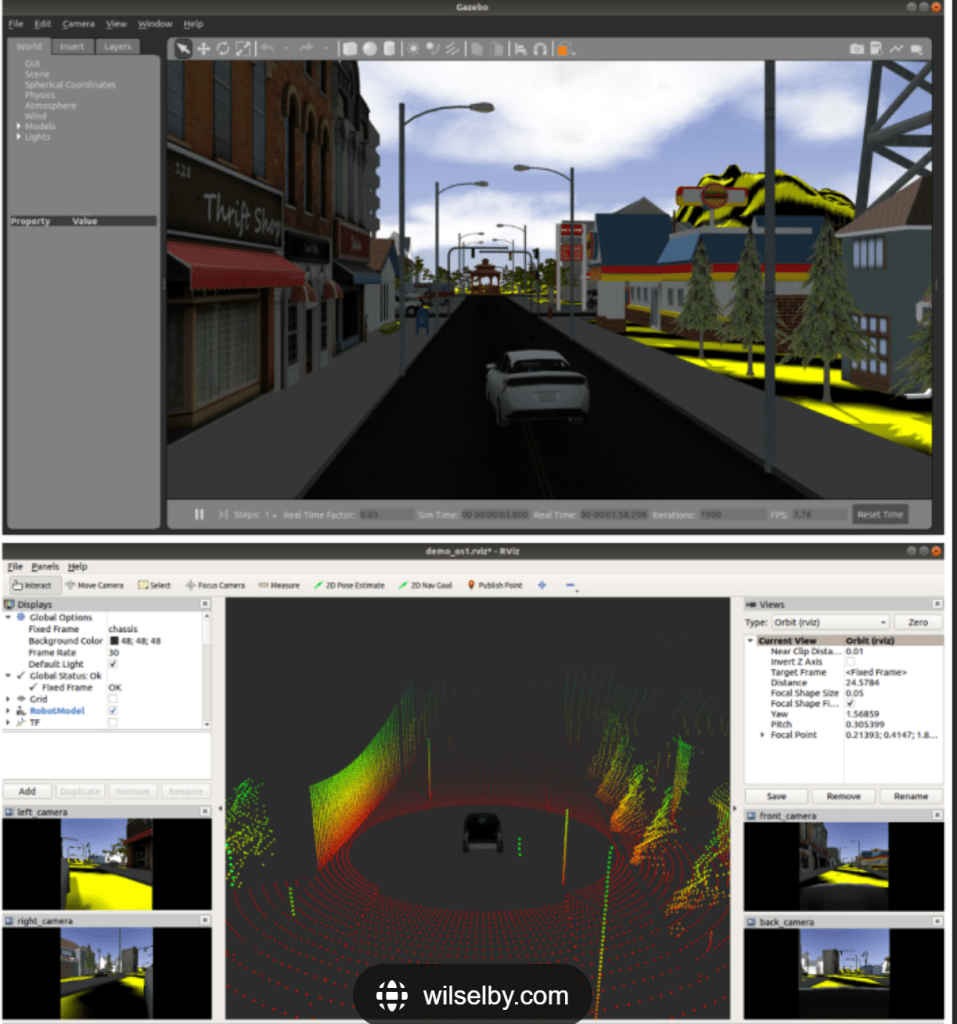

You should be able to view the point cloud in RViz. There are also over 140 command-line tools available.

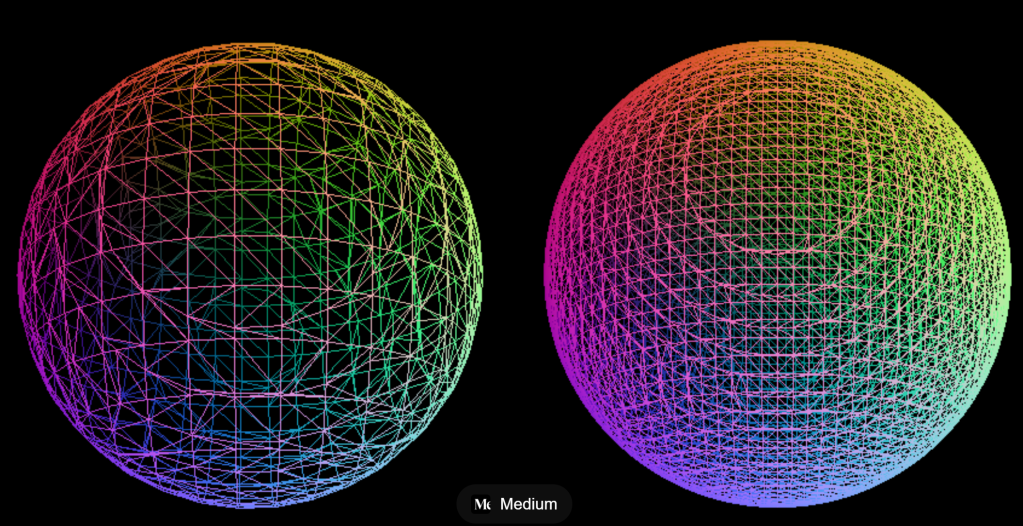

Additionally, we can mesh a point cloud using marching cubes reconstruction. Follow the steps in the exercise; the image below is from the Medium article "Voxel to Mesh Conversion: Marching Cube Algorithm".
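For some intuition about what marching cubes does, here is a rough sketch of its first step only: voxelizing the points and classifying each grid cube by which of its 8 corners are occupied. The resulting 8-bit index is what the full algorithm would look up in a 256-entry triangle table, which is left out here. The function names, the corner ordering, and the sample points are assumptions for illustration, not how any particular tool implements it.

```python
from itertools import product

# Corner offsets of a unit cube; a corner's position in this list is its bit.
# This ordering is an arbitrary choice for the sketch; real lookup tables
# fix their own corner numbering.
CORNERS = list(product((0, 1), repeat=3))


def voxelize(points, voxel_size):
    """Return the set of integer voxel coordinates containing at least one point."""
    return {tuple(int(c // voxel_size) for c in p) for p in points}


def cube_index(occupied, cell):
    """8-bit case index for the cube whose minimum corner is `cell`."""
    index = 0
    for bit, (dx, dy, dz) in enumerate(CORNERS):
        corner = (cell[0] + dx, cell[1] + dy, cell[2] + dz)
        if corner in occupied:
            index |= 1 << bit
    return index


def surface_cells(occupied):
    """Cubes with mixed corners (index not 0 or 255), i.e. where triangles would go."""
    # Only cubes touching an occupied voxel can have a non-zero index.
    candidates = {
        (x - dx, y - dy, z - dz)
        for (x, y, z) in occupied
        for (dx, dy, dz) in CORNERS
    }
    result = {}
    for cell in candidates:
        idx = cube_index(occupied, cell)
        if idx not in (0, 255):
            result[cell] = idx
    return result


# Hypothetical points (metres), for illustration only.
points = [(0.1, 0.1, 0.1), (0.15, 0.12, 0.1), (0.6, 0.1, 0.1)]
occupied = voxelize(points, voxel_size=0.5)
cells = surface_cells(occupied)
print(sorted(occupied))
print(len(cells))
```

Cubes with index 0 (all corners empty) or 255 (all corners occupied) lie entirely outside or inside the shape, so only the mixed cubes contribute to the mesh.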