The Model Context Protocol (MCP), recently released by Anthropic, is reminiscent of the role that ODBC (Open Database Connectivity) played for databases in the 1990s: it aims to make connectivity simpler and more consistent.

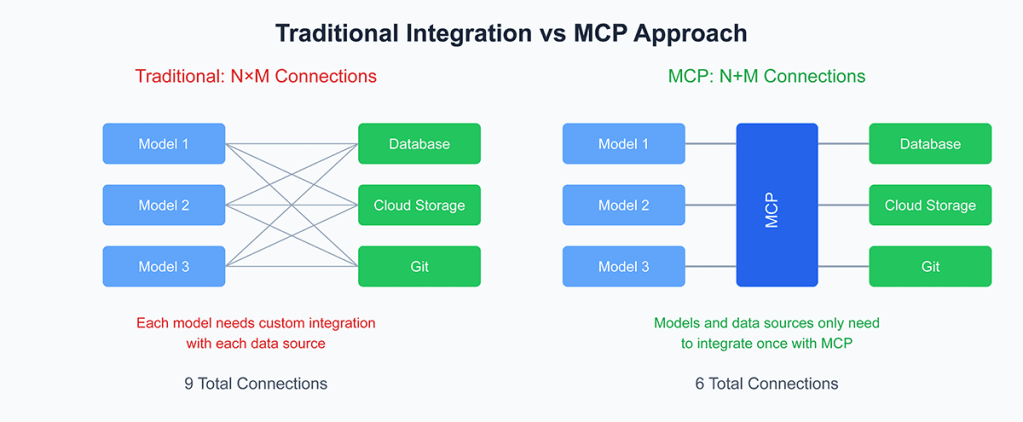

According to Vernon Keenan at salesforce dev, MCP is designed to solve a fundamental problem in enterprise AI adoption: the N×M integration problem, in which many AI applications must be connected to many tools and data sources, and each pairing requires its own custom integration. Just as ODBC standardized how applications connect to databases, MCP seeks to standardize how AI models interact with diverse environments such as local file systems, cloud services, collaboration platforms, and enterprise applications.
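A rough count shows why a shared protocol helps. Assume N AI applications and M tools or data sources, and assume each application implements the protocol once and each tool exposes it once (an idealization; real integrations may still need some glue code):

$$
\underbrace{N \times M}_{\text{custom integrations}} \quad\longrightarrow\quad \underbrace{N + M}_{\text{protocol implementations}}
$$

With a hypothetical 10 applications and 20 tools, that is 200 bespoke connectors versus 30 protocol implementations.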

ODBC consists of drivers (the translators) and a driver manager (which coordinates the translations), and it is widely supported by major database systems. MCP follows a similar pattern: MCP servers expose tools, data resources, and prompts, and MCP clients running inside a host application (such as Claude Desktop) connect to those servers using JSON-RPC 2.0 messages. The protocol aims to spare developers from writing redundant custom integration code every time they link a new tool or data source to an AI system.
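To make the driver analogy concrete: an MCP server advertises its tools in response to a `tools/list` request and runs one in response to a `tools/call` request, both carried as JSON-RPC 2.0 messages. The following is a minimal sketch using only the Python standard library, not the official MCP SDK. The `tool` decorator, the `TOOLS` registry, and the `add` tool are hypothetical, and a real server would also need the `initialize` handshake and a transport.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory registry: tool name -> description, JSON Schema, handler.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, description: str, input_schema: dict[str, Any]):
    """Decorator that registers a Python function as an MCP-style tool."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = {"description": description, "inputSchema": input_schema, "fn": fn}
        return fn
    return register


@tool("add", "Add two integers.", {
    "type": "object",
    "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
    "required": ["a", "b"],
})
def add(a: int, b: int) -> str:
    return str(a + b)


def error(req_id: Any, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the JSON response string."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        # Advertise every registered tool so the client (and the model) can discover it.
        result = {"tools": [
            {"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
            for n, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            # -32602 is the standard JSON-RPC "Invalid params" code.
            return error(req_id, -32602, f"unknown tool: {params.get('name')}")
        text = entry["fn"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return error(req_id, -32601, f"method not found: {method}")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


if __name__ == "__main__":
    print(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
    print(handle(json.dumps({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                             "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})))
```

Running the file prints the tool listing and then a `tools/call` result whose text content is `"5"`. The point of the design is that any MCP client can discover and call `add` without knowing anything about it in advance, much as an ODBC application can talk to any database through its driver.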

MCP's current focus on local connections (for example, servers launched as local processes that communicate with the client over standard input/output) does, however, create barriers to enterprise deployment. Enterprises need scalability and distributed capabilities, so deploying MCP in cloud-native environments may be complex, especially where high-throughput operations are required. Anthropic's engineering team is actively working on extending MCP to support remote connections, but this adds layers of complexity around security, deployment, and authentication. For now, MCP is best suited to local, small-scale solutions. As it evolves, community involvement will play a key role in shaping it into a production-ready tool that can handle enterprise-grade scale.
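To illustrate what a local connection means in practice: with MCP's stdio transport, the client launches the server as a child process on the same machine and exchanges newline-delimited JSON-RPC messages over its standard input and output. The sketch below assumes a toy echo server (`SERVER_SOURCE`) and a hypothetical helper `call_local_server`. It is not the official SDK and skips the initialization handshake; it only shows the shape of the exchange.

```python
import json
import subprocess
import sys

# Hypothetical stand-in for a local MCP server: it reads newline-delimited
# JSON-RPC requests from stdin and writes one JSON response line per request.
SERVER_SOURCE = r'''
import json, sys
for line in sys.stdin:
    req = json.loads(line)
    resp = {"jsonrpc": "2.0", "id": req["id"], "result": {"echo": req["method"]}}
    sys.stdout.write(json.dumps(resp) + "\n")
    sys.stdout.flush()
'''


def call_local_server(method: str, request_id: int = 1) -> dict:
    """Launch the server as a child process and exchange one message over stdio."""
    proc = subprocess.Popen(
        [sys.executable, "-c", SERVER_SOURCE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    request = {"jsonrpc": "2.0", "id": request_id, "method": method}
    # Each message is a single line of JSON terminated by a newline;
    # communicate() sends it, closes stdin, and waits for the server to exit.
    out, _ = proc.communicate(json.dumps(request) + "\n", timeout=10)
    return json.loads(out.splitlines()[0])


if __name__ == "__main__":
    print(call_local_server("tools/list"))
```

Nothing here crosses the network, and each client owns its own server process. That keeps things simple on a single machine, but serving many remote users would require a network transport plus authentication, which is where the enterprise complexity comes in.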

We should approach MCP as a development tool and keep a close watch on its evolution. If Anthropic can overcome the challenges of enterprise readiness, governance, and scalability, MCP could indeed become the ‘ODBC for AI’: a critical infrastructure layer that makes AI integration more accessible and manageable for everyone.

However, one question remains open: how do you ensure data privacy when you build an app or server on top of MCP? The LLM, whether from Anthropic, OpenAI, or Groq, still needs to reach and access local data resources in order to analyze them…