Tokenization is the process of breaking down text into smaller units (tokens) such as words, subwords, or characters. Different tokenization methods are used based on the task, language, and requirements of the model.

- Word-Based Tokenization

- Character-Based Tokenization, this increase computation cost a lot.

- Subword-Based Tokenization like Byte Pair Encoding (BPE), Wordpiece of BERT, SentencePiece.

- Sentence Tokenization, Byte-Level Tokenization, Morphological Tokenization, Neural Tokenization

- N-Gram Tokenization

Use this web app to test out: https://tiktokenizer.vercel.app/

Andrej talks about why not using unicode directly, it contains over 149k codes, and it keeps growing hence not ideal. There are UTF-8, 16 and 32. -8 is by far the most common.

worth comparing UTF-8 and ASCII, ASCII is a subset of UTF-8). ASCII is a character encoding standard that uses 7 bits to represent characters. It maps 128 unique characters, which include English alphabet, numbers, symbols, control characters such as new lines or backspaces. It’s limited to 128 characters.

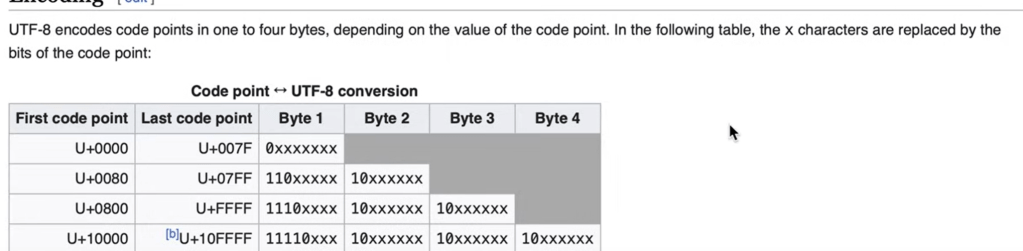

UTF-8 (Unicode Transformation Format – 8-bit) is a variable-length character encoding system for Unicode, which is designed to support virtually all characters and symbols from every language. It uses 1 to 4 bytes to represent a character, depending on its Unicode code point. Encoding Details:

- 4 bytes: For rare characters (e.g., emojis, less-used scripts).

- 1 byte (7 bits): For ASCII characters (0–127).

- 2 bytes: For characters in the range

128–2047(e.g., Latin-1 Supplement). - 3 bytes: For most commonly used characters (e.g., Chinese, Arabic, and other scripts).

Note one byte=8 bits, A single byte can represent 256 unique values (from 0 to 255 in decimal) because 28=2562^8 = 25628=256.

Let’s dive into BPE, yte Pair Encoding (BPE) is a subword tokenization technique widely used in natural language processing (NLP) to handle rare words and achieve an efficient trade-off between word-based and character-based tokenization. BPE is based on the idea of iteratively merging the most frequent pairs of symbols (characters or character sequences) into new symbols. BPE is foundational for models like GPT and BERT, where it efficiently handles large vocabularies and rare words. The benefits are that it reduce voc size works across different language and better generalization.

Training a tokenizer is a monumental task itself, in the end each token is assigned a unique integer ID. This mapping is used to convert text into numerical inputs for models.

For Highly Specialized Domain, Your text data contains highly specialized terms or symbols (e.g., legal, medical, financial, scientific, or robotics domains).

How is the token be assigned an integer? after tokenizer is trained, A vocabulary is a mapping of all possible tokens to unique integers. If a token is not in the vocabulary (unknown token), a special token like <unk> is assigned, which maps to a specific integer.

The major purpose of assigning integers to tokens, is that integers are used as indices for embedding layers or lookup tables.

Here’s an example of how integers corresponding to tokens are used to look up embeddings from an embedding table:

vocab = {"I": 0, "love": 1, "AI": 2, ".": 3, "<unk>": 4}

# Embedding table for 5 tokens (vocabulary size) and embedding dimension 3

embedding_table = torch.tensor([

[0.1, 0.2, 0.3], # Embedding for token "I" (index 0)

[0.4, 0.5, 0.6], # Embedding for token "love" (index 1)

[0.7, 0.8, 0.9], # Embedding for token "AI" (index 2)

[1.0, 1.1, 1.2], # Embedding for token "." (index 3)

[0.0, 0.0, 0.0] # Embedding for "<unk>" (index 4)

])

# Tokenized input

tokens = ["I", "love", "AI", "awesome"]

# Map tokens to integers

token_ids = [vocab.get(token, vocab["<unk>"]) for token in tokens]

print(token_ids) # Output: [0, 1, 2, 4]

# Convert token IDs to a tensor

token_ids_tensor = torch.tensor(token_ids)

# Look up embeddings

embeddings = embedding_table[token_ids_tensor]

print(embeddings)

# output is

tensor([

[0.1, 0.2, 0.3], # Embedding for "I"

[0.4, 0.5, 0.6], # Embedding for "love"

[0.7, 0.8, 0.9], # Embedding for "AI"

[0.0, 0.0, 0.0] # Embedding for "<unk>" (unknown token)

])