DeepSeek originated as a research initiative within High-Flyer, a Chinese quantitative hedge fund known for its AI-driven trading strategies. In April 2023, High-Flyer established DeepSeek as an independent entity dedicated to advancing artificial general intelligence (AGI), explicitly separating its research from the firm’s financial operations. Wikipedia

Since its inception, DeepSeek has developed several notable AI models. In May 2024, the company released DeepSeek-V2, which gained attention for its strong performance and cost-effectiveness, prompting competitive responses from major tech companies in China. Wikipedia

More recently, in January 2025, DeepSeek introduced the R1 model, capable of self-improvement without human supervision, further establishing its position in the AI research community.

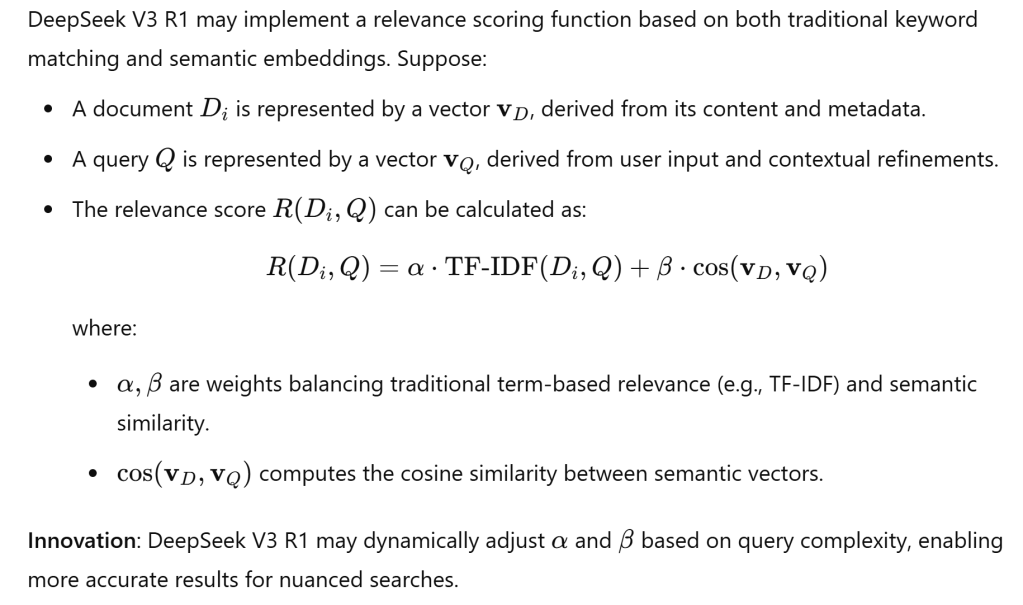

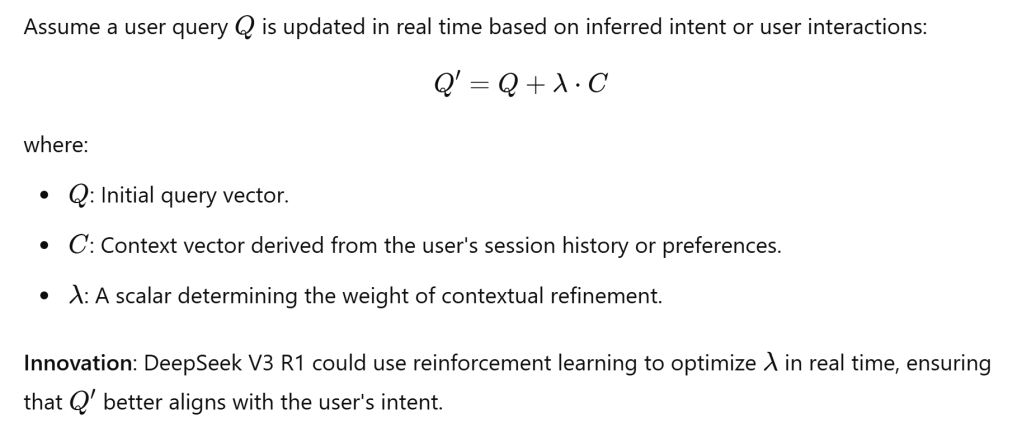

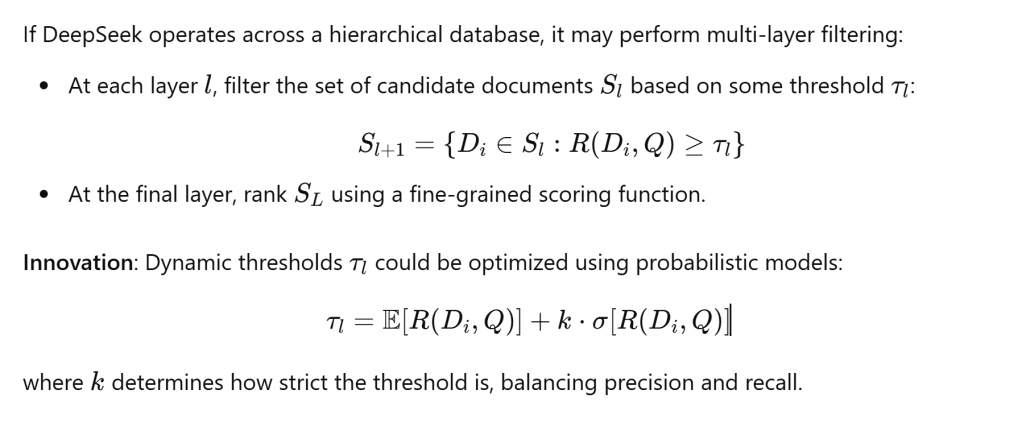

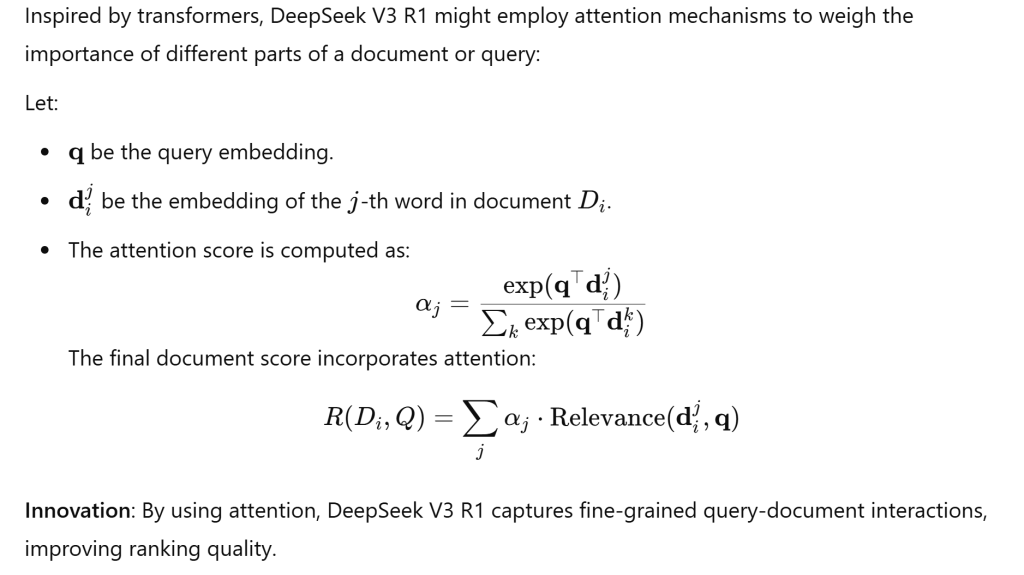

The key innovations by deepseek? DeepSeek V3 R1 likely innovates by integrating:

- Weighted relevance scoring

2. Contextual Query refinement

3. Hierarchical Search Space Optimization

4. Attention driven ranking

Another version from Claude 3.5 Sonnet: Searched web: deepseek r1 github code implementation reinforcement learning distillation

- GitHub – deepseek-ai/DeepSeek-R1

- DeepSeek-R1 — Training Language Models to reason through Reinforcement …

- Aakash Nain – DeepSeek-R1

- unsloth/DeepSeek-R1-Distill-Llama-8B-GGUF – Hugging Face

- DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via DeepSeek-R1 …

MoE Architecture Implementation

# Pseudo-code representation of MoE layer

class MoELayer:

def __init__(self, num_experts=16, hidden_size=4096):

self.experts = [Expert() for _ in range(num_experts)]

self.router = Router(hidden_size, num_experts)

def forward(self, x):

# Router selects top-k experts for each token

expert_weights = self.router(x) # Shape: [batch_size, seq_len, num_experts]

top_k_weights, top_k_indices = select_top_k(expert_weights, k=2)

# Only activate selected experts

output = sum([

weight * self.experts[idx](x)

for weight, idx in zip(top_k_weights, top_k_indices)

])

return outputReinforcement Learning Training

# Group Relative Policy Optimization implementation

def grpo_training_step(model, batch, optimizer):

# Generate multiple responses for each prompt

responses = []

for _ in range(group_size):

response = model.generate(

batch['prompt'],

max_length=32768, # Extended context window

temperature=0.6,

top_p=0.95

)

responses.append(response)

# Compute rewards for each response

rewards = [

compute_reward(response, batch['ground_truth'])

for response in responses

]

# Update policy using GRPO

loss = compute_grpo_loss(responses, rewards)

loss.backward()

optimizer.step()Reward Function Implementation

def compute_reward(response, ground_truth):

# Accuracy component

accuracy_reward = evaluate_correctness(response, ground_truth)

# Format adherence component

format_reward = check_formatting(response)

# Language consistency component (addressing mixing issues)

language_consistency = calculate_language_consistency(response)

return (

accuracy_reward +

format_reward * format_weight +

language_consistency * consistency_weight

)Training Pipeline Structure

class DeepSeekTrainingPipeline:

def train(self, base_model):

# Phase 1: Direct RL (DeepSeek-R1-Zero)

model_zero = self.train_with_rl(base_model)

# Phase 2: Cold Start Data

sft_data = load_reasoning_examples()

model_sft = self.supervised_fine_tune(base_model, sft_data)

# Phase 3: Advanced RL

model_r1 = self.train_with_rl(model_sft)

# Phase 4: Distillation

distilled_models = self.distill_to_sizes([

"1.5B", "7B", "8B", "14B", "32B", "70B"

], model_r1)Distillation Process

def distill_knowledge(teacher_model, student_model):

def training_step(batch):

# Teacher generates reasoning paths

with torch.no_grad():

teacher_outputs = teacher_model(

batch['input'],

temperature=0.6,

max_length=32768

)

# Student learns from teacher's outputs

student_outputs = student_model(batch['input'])

# Knowledge distillation loss

kd_loss = compute_distillation_loss(

student_outputs,

teacher_outputs,

temperature=2.0 # Higher temperature for softer probabilities

)

return kd_loss

Evaluation Setup

def evaluate_model(model, benchmark_data):

results = {}

# Configure generation parameters

gen_kwargs = {

"max_length": 32768,

"temperature": 0.6,

"top_p": 0.95,

"num_return_sequences": 64 # For pass@1 calculation

}

for benchmark, data in benchmark_data.items():

correct = 0

for sample in data:

responses = model.generate(sample['input'], **gen_kwargs)

# Take best of 64 responses

correct += any(is_correct(r, sample['answer']) for r in responses)

results[benchmark] = correct / len(data)

return resultsOverall, what generated by openAI or Claude 3.5 sonnet is lack of satisfaction. in the next blog, I will dive deep to the github codes and paper of deepseek and use deepseek itself to analyze it.