While Lagrange multipliers are a powerful technique for constrained optimization, there are several other methods used to solve optimization problems:

- Gradient Descent: An iterative first-order optimization algorithm for finding a local minimum of a differentiable function[2][4]. It’s commonly used in machine learning for training models by minimizing error functions.

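- Newton’s Method: A second-order optimization technique that uses both first and second derivatives (the gradient and the Hessian) to find a minimum of a function. Near the minimum it typically converges much faster than gradient descent (quadratically rather than linearly), at the cost of computing and solving with the Hessian at every step.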

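- Quasi-Newton Methods: These methods, such as BFGS and L-BFGS, build an approximation to the Hessian matrix (or its inverse) from successive gradients. This achieves faster convergence than gradient descent while avoiding the cost of computing the exact Hessian, as Newton’s method must.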

- Substitution Method: For simple problems with equality constraints, this method solves the constraint for one variable and substitutes the result into the objective function, turning the problem into an unconstrained one[1].

- Branch and Bound: An algorithm for discrete and combinatorial optimization problems that partitions the solution space into smaller subsets and uses bounds to eliminate suboptimal solutions[1].

- Interior Point Methods: These algorithms solve linear and nonlinear convex optimization problems by traversing the interior of the feasible region.

- Simplex Method: Specifically designed for linear programming problems, this algorithm moves along the edges of the feasible region to find the optimal solution.

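- Stochastic Gradient Descent (SGD): A variant of gradient descent that updates parameters using the gradient computed on a single randomly chosen example (or a small random subset) instead of the full dataset, which makes each update much cheaper for large datasets[4].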

- Mini-Batch Gradient Descent: A compromise between batch (full-dataset) gradient descent and SGD that computes each parameter update from a small batch of examples[4].
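As a rough illustration of the first two entries, the sketch below minimizes the one-variable function f(x) = x^4 - 3x^3 + 2 (an arbitrary test function chosen for this example; its minimum is at x = 9/4 = 2.25) starting from x = 4, once with plain gradient descent and once with Newton’s method. The step size 0.01, the tolerance, and the function names are assumptions made for the sketch.

```python
def fprime(x):
    """First derivative of f(x) = x**4 - 3*x**3 + 2."""
    return 4 * x**3 - 9 * x**2


def fsecond(x):
    """Second derivative of f (the 1-D 'Hessian')."""
    return 12 * x**2 - 18 * x


def gradient_descent(x, lr=0.01, tol=1e-8, max_iter=10_000):
    """First-order method: repeatedly step against the gradient with a fixed step size."""
    for k in range(max_iter):
        g = fprime(x)
        if abs(g) < tol:
            return x, k
        x -= lr * g
    return x, max_iter


def newton(x, tol=1e-8, max_iter=100):
    """Second-order method: rescale each step by the local curvature f''(x)."""
    for k in range(max_iter):
        g = fprime(x)
        if abs(g) < tol:
            return x, k
        x -= g / fsecond(x)
    return x, max_iter


x_gd, it_gd = gradient_descent(4.0)
x_nt, it_nt = newton(4.0)
print(f"gradient descent: x = {x_gd:.6f} after {it_gd} iterations")
print(f"Newton's method:  x = {x_nt:.6f} after {it_nt} iterations")
```

Both runs end near x = 2.25, but Newton’s method needs far fewer iterations because each step uses curvature information. In many dimensions that advantage is paid for by forming and solving with the Hessian, which is the cost quasi-Newton methods try to avoid.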

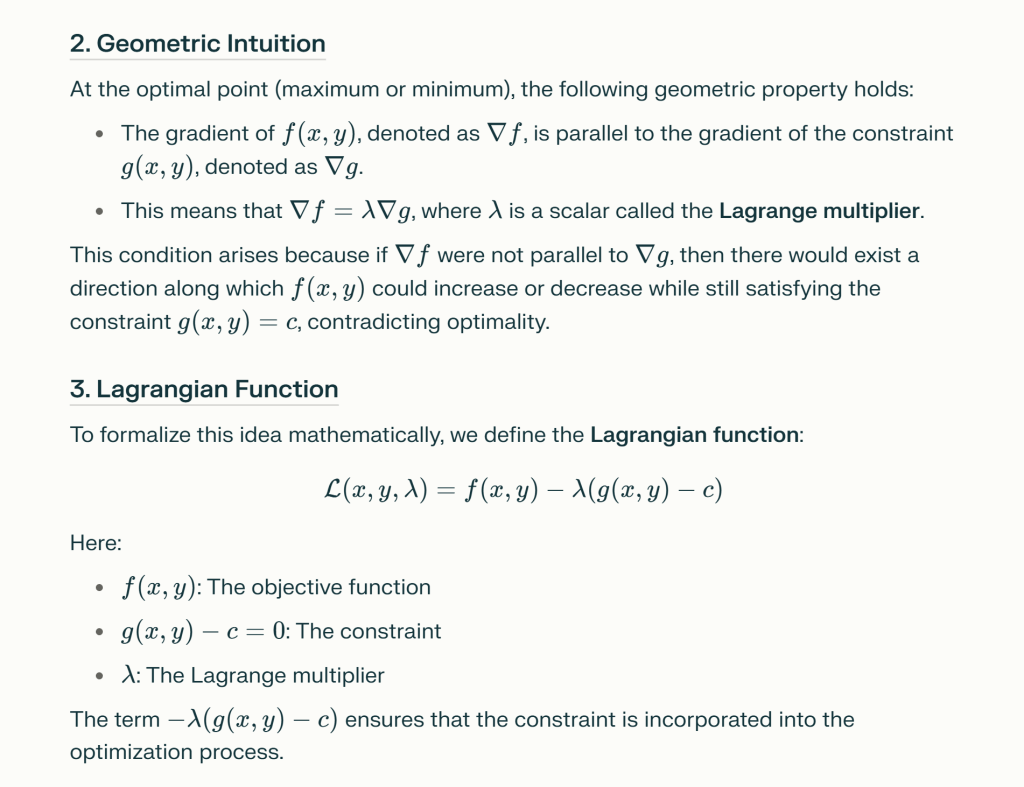

The genius of Lagrange, who came up with the Lagrange multiplier method for solving constrained optimization problems: his method is rooted in geometric intuition. It recognizes that at the optimal point, the gradient of the objective function f must be parallel to the gradient of the constraint function g. This insight is captured by the equation

$$\nabla f(\mathbf{x}) = \lambda \nabla g(\mathbf{x}),$$

where the scalar λ is the Lagrange multiplier.
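A small worked example, with a function and constraint chosen here purely for illustration: maximize f(x, y) = xy subject to x + y = 10, writing the constraint as g(x, y) = x + y - 10 = 0 and applying the parallel-gradient condition ∇f = λ∇g.

```latex
\begin{aligned}
\nabla f(x, y) &= (y,\ x), \qquad \nabla g(x, y) = (1,\ 1) \\
\nabla f = \lambda \nabla g &\;\Longrightarrow\; y = \lambda, \quad x = \lambda \\
x + y = 10 &\;\Longrightarrow\; 2\lambda = 10 \;\Longrightarrow\; \lambda = 5 \\
(x^*, y^*) &= (5,\ 5), \qquad f(x^*, y^*) = 25
\end{aligned}
```

Along the line x + y = 10 the product x(10 - x) is concave, so this stationary point is the maximum. The multiplier also has a meaning of its own: if the constraint is relaxed to x + y = c, the optimal value is c^2/4, whose derivative at c = 10 is 5 = λ. So λ measures how fast the optimum improves as the constraint is loosened.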

- The Karush-Kuhn-Tucker (KKT) conditions extend the Lagrange multiplier method to handle inequality constraints, making them applicable to a broader class of optimization problems.

- Optimality Verification: They provide a way to check whether a candidate solution is optimal. When a constraint qualification (a regularity condition) holds, every local optimum satisfies the KKT conditions, so they are necessary. For convex problems, any point that satisfies them is a global optimum, so they are also sufficient.

- Foundation for Algorithms: Many numerical optimization algorithms, such as interior-point methods and sequential quadratic programming, are based on the KKT conditions.

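- Duality Theory: The KKT conditions are closely related to duality in optimization. The multipliers play the role of dual variables, and each one measures how sensitive the optimal objective value is to a small change in its constraint. This gives insight into the structure of a problem and underlies efficient solution methods.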
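To make the KKT conditions concrete, here is a minimal sketch built on a toy problem chosen for illustration: minimize f(x, y) = (x - 2)^2 + (y - 1)^2 subject to x + y <= 2. The constraint is written as g(x, y) = x + y - 2 <= 0, using the common sign convention ∇f + μ∇g = 0 with μ >= 0. The unconstrained minimum (2, 1) violates the constraint, so the constraint must be active at the optimum. Solving 2(x - 2) + μ = 0, 2(y - 1) + μ = 0, and x + y = 2 gives (x, y) = (1.5, 0.5) with μ = 1. The helper `kkt_satisfied` is hypothetical, not a library function.

```python
def f_grad(x, y):
    """Gradient of the objective f(x, y) = (x - 2)**2 + (y - 1)**2."""
    return (2 * (x - 2), 2 * (y - 1))


def g(x, y):
    """Inequality constraint written as g(x, y) = x + y - 2 <= 0."""
    return x + y - 2


G_GRAD = (1.0, 1.0)  # gradient of g, constant because g is linear


def kkt_satisfied(x, y, mu, tol=1e-9):
    """Check the four KKT conditions at (x, y) with multiplier mu."""
    fx, fy = f_grad(x, y)
    # 1. stationarity: grad f + mu * grad g = 0
    stationarity = abs(fx + mu * G_GRAD[0]) < tol and abs(fy + mu * G_GRAD[1]) < tol
    # 2. primal feasibility: the constraint holds
    primal_feasible = g(x, y) <= tol
    # 3. dual feasibility: multiplier of an inequality is nonnegative
    dual_feasible = mu >= -tol
    # 4. complementary slackness: mu > 0 only if the constraint is active
    complementary = abs(mu * g(x, y)) < tol
    return stationarity and primal_feasible and dual_feasible and complementary


print(kkt_satisfied(1.5, 0.5, 1.0))  # True: the constrained optimum
print(kkt_satisfied(2.0, 1.0, 0.0))  # False: unconstrained minimum is infeasible
print(kkt_satisfied(1.0, 1.0, 0.0))  # False: feasible, but not stationary
```

Because f is convex and g is linear, satisfying the KKT conditions is enough here to certify that (1.5, 0.5) is the global minimum.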

What if there are more constraints? How do you apply Lagrange multipliers then? When a problem has several constraints, you can still use Lagrange’s multiplier method, but you need to extend it to handle all of them: introduce one Lagrange multiplier per constraint and then solve the resulting system of equations.

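Here’s a step-by-step outline:

- Step 1: Write each equality constraint in the form $g_i(\mathbf{x}) = 0$ for $i = 1, \dots, m$.

- Step 2: Introduce one multiplier $\lambda_i$ per constraint and form the Lagrangian $\mathcal{L}(\mathbf{x}, \lambda_1, \dots, \lambda_m) = f(\mathbf{x}) - \sum_{i=1}^{m} \lambda_i g_i(\mathbf{x})$.

- Step 3: Set every partial derivative of $\mathcal{L}$ to zero. This gives $\nabla f(\mathbf{x}) = \sum_{i=1}^{m} \lambda_i \nabla g_i(\mathbf{x})$ together with $g_i(\mathbf{x}) = 0$ for each $i$.

- Step 4: Solve this system of $n + m$ equations in $n + m$ unknowns, then evaluate $f$ at each candidate point to pick the optimum. The method requires the constraint gradients $\nabla g_i$ to be linearly independent at the solution.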
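A minimal sketch of the multi-constraint procedure (one multiplier per constraint, then solve the resulting system), using a toy problem picked for illustration: minimize f(x, y, z) = x^2 + y^2 + z^2 subject to x + y + z = 1 and x + 2y + 3z = 3. With the Lagrangian L = f - λ1 g1 - λ2 g2, setting all partial derivatives to zero gives 5 linear equations in x, y, z, λ1, λ2. The sketch solves them exactly with Python’s `fractions.Fraction`. The helper names `solve_linear` and `min_norm_with_equalities` are hypothetical.

```python
from fractions import Fraction


def solve_linear(a, b):
    """Solve a @ x = b exactly by Gauss-Jordan elimination over fractions."""
    n = len(a)
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # swap in the first row (at or below col) with a nonzero entry in this column
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]  # scale the pivot row so the pivot is 1
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [vr - factor * vp for vr, vp in zip(m[r], m[col])]
    return [row[n] for row in m]


def min_norm_with_equalities(A, b):
    """Minimize x.x subject to A x = b with one Lagrange multiplier per row of A.

    Unknowns are ordered as (x_1..x_n, lam_1..lam_k).
    """
    k, n = len(A), len(A[0])
    rows, rhs = [], []
    # stationarity: dL/dx_j = 2 x_j - sum_i lam_i * A[i][j] = 0
    for j in range(n):
        rows.append([2 if c == j else 0 for c in range(n)] + [-A[i][j] for i in range(k)])
        rhs.append(0)
    # feasibility: dL/dlam_i = 0 recovers the constraint A_i . x = b_i
    for i in range(k):
        rows.append(list(A[i]) + [0] * k)
        rhs.append(b[i])
    sol = solve_linear(rows, rhs)
    return sol[:n], sol[n:]


x, lam = min_norm_with_equalities([[1, 1, 1], [1, 2, 3]], [1, 3])
print("x =", x)
print("lambda =", lam)
```

Working the system by hand gives (x, y, z) = (-1/6, 1/3, 5/6) with λ1 = -4/3 and λ2 = 1, which is what the sketch returns. The equations are linear only because the objective is quadratic and the constraints are linear. With nonlinear constraints, the same system usually has to be solved numerically, for example with Newton’s method.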

The Barra Risk Model, developed by Barra (now part of MSCI), is a multi-factor risk model that is widely used to optimize portfolios under various constraints, with concepts similar to Lagrange multipliers. It focuses on capturing and managing risk while the portfolio aims for a desired return. Here’s how it relates to the idea of constraints and optimization:

Key Components of the Barra Risk Model:

- Risk Factors: The model identifies specific risk factors (e.g., market, size, value, momentum) that drive the returns of assets. Each asset’s exposures to these factors, together with the factor covariances and each asset’s specific (idiosyncratic) risk, are used to estimate the risk of a portfolio.

- Optimization Framework: When an optimal portfolio is constructed with the Barra Risk Model, the goal is to maximize expected return for a given level of risk or to minimize risk for a specified level of expected return. This is a constrained optimization problem.

- Constraints: The model incorporates various constraints, such as:

  - Budget Constraints: The total investment must equal a specified amount.

  - Risk Constraints: Limits on the portfolio’s exposure to certain risk factors.

  - Regulatory Constraints: Restrictions on the allocation to specific asset classes or industries.

- Use of Lagrange Multipliers: While the Barra model itself does not explicitly detail the use of Lagrange multipliers, the underlying optimization algorithms can be thought of as analogous to the method of Lagrange multipliers. The model will effectively balance the trade-offs between expected return and risk (captured through the constraints) to arrive at an optimal portfolio allocation.

- Quadratic Programming: In practice, portfolio optimization with the Barra Risk Model usually involves quadratic programming. Portfolio variance is a quadratic function of the weights, while budget, exposure, and allocation limits are linear constraints, and a QP solver can handle many such constraints at once. This is closely related to Lagrange multipliers: QP solvers search for a point that satisfies the KKT conditions, optimizing the objective while respecting every constraint.
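To make the Lagrange-multiplier and QP connection concrete, here is a minimal sketch of a minimum-variance portfolio with two equality constraints: a budget constraint (weights sum to 1) and a factor-exposure (risk) constraint (portfolio beta equals 1.0). It is not the Barra model or MSCI’s optimizer. The one-factor covariance Σ = β σ_f^2 β^T + D only mimics the general shape of a factor risk model, and every number (exposures, factor variance, specific variances, target beta) is hypothetical, as are the helper names `solve_linear` and `min_variance_weights`. With only equality constraints, the KKT conditions of this quadratic program form a linear system, so a single linear solve suffices.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # partial pivoting: move the largest |entry| in this column onto the diagonal
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # back substitution on the upper-triangular system
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


# Hypothetical one-factor model: exposures, factor variance, specific variances
beta = [0.8, 1.0, 1.3]
factor_var = 0.04
specific_var = [0.02, 0.03, 0.05]
n = len(beta)

# Factor-model covariance: Sigma = beta * factor_var * beta^T + diag(specific_var)
sigma = [[beta[i] * factor_var * beta[j] + (specific_var[i] if i == j else 0.0)
          for j in range(n)] for i in range(n)]


def min_variance_weights(sigma, constraints, targets):
    """Minimize w^T Sigma w subject to a_k . w = targets[k] for each constraint row a_k.

    Lagrangian: w^T Sigma w - sum_k lam_k (a_k . w - t_k). Returns (weights, multipliers).
    """
    n, k = len(sigma), len(constraints)
    rows, rhs = [], []
    for i in range(n):  # stationarity: 2 (Sigma w)_i - sum_k lam_k a_k[i] = 0
        rows.append([2 * sigma[i][j] for j in range(n)] + [-a[i] for a in constraints])
        rhs.append(0.0)
    for a, t in zip(constraints, targets):  # feasibility: a_k . w = t_k
        rows.append(list(a) + [0.0] * k)
        rhs.append(t)
    sol = solve_linear(rows, rhs)
    return sol[:n], sol[n:]


budget = [1.0] * n  # fully invested: weights sum to 1
w, lam = min_variance_weights(sigma, [budget, beta], [1.0, 1.0])  # target beta 1.0
variance = sum(w[i] * sigma[i][j] * w[j] for i in range(n) for j in range(n))
print("weights:", [round(v, 4) for v in w])
print("multipliers:", [round(v, 4) for v in lam])
print("portfolio variance:", round(variance, 6))
```

Inequality constraints such as position limits or a no-short-selling rule would turn this into a genuine QP that needs an iterative method (for example active-set or interior-point) rather than one linear solve.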