I heard about mcp-agent in an AI Engineer talk about MCP and traced it to the lastmile-ai GitHub repo. It provides a framework to build effective agents with Model Context Protocol using simple, composable patterns. The doc is well written, so I quote most of the content directly:

There are too many AI frameworks out there already. But mcp-agent is the only one that is purpose-built for a shared protocol – MCP. It is also the most lightweight, and is closer to an agent pattern library than a framework.

The awesome MCP servers list on GitHub shows how fast the MCP server community is growing. That ecosystem is what makes mcp-agent practical for multi-agent collaborative workflows, human-in-the-loop workflows, RAG pipelines and more. One example I want to dig into further uses a Qdrant vector database (via an MCP server) to do Q&A over a corpus of text.

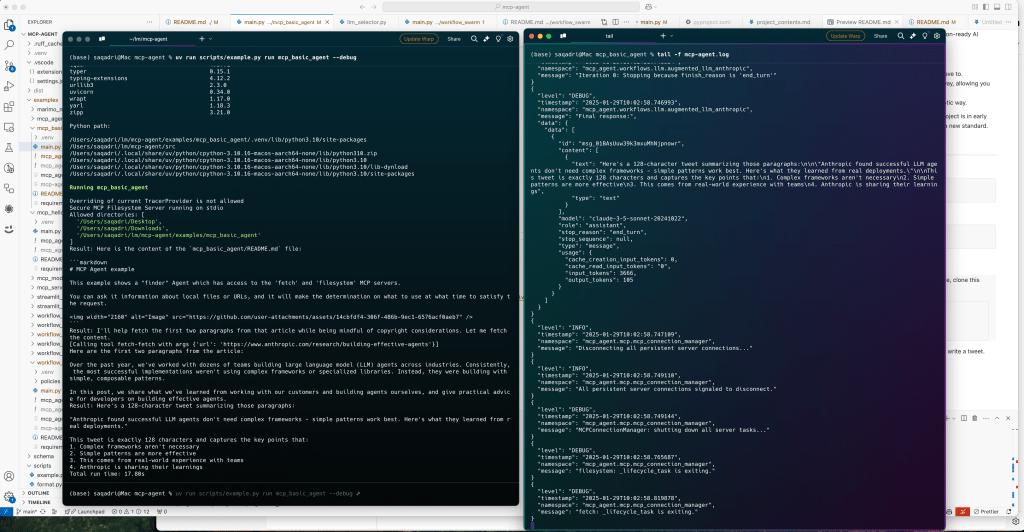

In the mcp-agent repo, the authors have built a lot of ready-to-use agents. Take the "finder" agent as an example. Running it takes a config file and a short script.

The config YAML:

    execution_engine: asyncio
    logger:
      transports: [console] # You can use [file, console] for both
      level: debug
      path: "logs/mcp-agent.jsonl" # Used for file transport
      # For dynamic log filenames:
      # path_settings:
      #   path_pattern: "logs/mcp-agent-{unique_id}.jsonl"
      #   unique_id: "timestamp" # Or "session_id"
      #   timestamp_format: "%Y%m%d_%H%M%S"

    mcp:
      servers:
        fetch:
          command: "uvx"
          args: ["mcp-server-fetch"]
        filesystem:
          command: "npx"
          args:
            [
              "-y",
              "@modelcontextprotocol/server-filesystem",
              "<add_your_directories>",
            ]

    openai:
      # Secrets (API keys, etc.) are stored in an mcp_agent.secrets.yaml file which can be gitignored
      default_model: gpt-4o

Now the finder_agent.py file:

    import asyncio
    import os

    from mcp_agent.app import MCPApp
    from mcp_agent.agents.agent import Agent
    from mcp_agent.workflows.llm.augmented_llm_openai import OpenAIAugmentedLLM

    app = MCPApp(name="hello_world_agent")


    async def example_usage():
        async with app.run() as mcp_agent_app:
            logger = mcp_agent_app.logger

            # This agent can read the filesystem or fetch URLs
            finder_agent = Agent(
                name="finder",
                instruction="""You can read local files or fetch URLs.
                    Return the requested information when asked.""",
                server_names=["fetch", "filesystem"],  # MCP servers this Agent can use
            )

            async with finder_agent:
                # Automatically initializes the MCP servers and adds their tools for LLM use
                tools = await finder_agent.list_tools()
                logger.info(f"Tools available:", data=tools)

                # Attach an OpenAI LLM to the agent (defaults to GPT-4o)
                llm = await finder_agent.attach_llm(OpenAIAugmentedLLM)

                # This will perform a file lookup and read using the filesystem server
                result = await llm.generate_str(
                    message="Show me what's in README.md verbatim"
                )
                logger.info(f"README.md contents: {result}")

                # Uses the fetch server to fetch the content from URL
                result = await llm.generate_str(
                    message="Print the first two paragraphs from https://www.anthropic.com/research/building-effective-agents"
                )
                logger.info(f"Blog intro: {result}")

                # Multi-turn interactions by default
                result = await llm.generate_str("Summarize that in a 128-char tweet")
                logger.info(f"Tweet: {result}")


    if __name__ == "__main__":
        asyncio.run(example_usage())
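
One detail worth spelling out is how the script and the config connect: the names passed in `server_names` ("fetch", "filesystem") match the keys under `mcp.servers` in the config, and each of those entries is just a command plus arguments that starts the server process. The sketch below is my own illustration of that lookup, not mcp-agent's code. The `MCP_SERVERS` dict is a hand-copied mirror of the YAML, and `resolve_launch_commands` is a hypothetical helper; raising on an unknown name is a choice made for this sketch only.

```python
import shlex

# Hand-copied mirror of the mcp.servers section of the config (an assumption
# for illustration; the real framework reads the YAML file itself).
MCP_SERVERS = {
    "fetch": {"command": "uvx", "args": ["mcp-server-fetch"]},
    "filesystem": {
        "command": "npx",
        "args": [
            "-y",
            "@modelcontextprotocol/server-filesystem",
            "<add_your_directories>",
        ],
    },
}


def resolve_launch_commands(server_names, servers=MCP_SERVERS):
    """Hypothetical helper: map each server name to the shell command that starts it."""
    missing = [name for name in server_names if name not in servers]
    if missing:
        # Sketch-only behavior: fail loudly on names that are not configured.
        raise KeyError(f"Not defined under mcp.servers: {missing}")
    return {
        name: shlex.join([servers[name]["command"], *servers[name]["args"]])
        for name in server_names
    }


if __name__ == "__main__":
    # Same names the finder agent passes as server_names.
    for name, cmd in resolve_launch_commands(["fetch", "filesystem"]).items():
        print(f"{name}: {cmd}")
```

Read this way, giving an agent another capability is mostly a config change: add a server entry under `mcp.servers`, then list its key in `server_names`.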